|

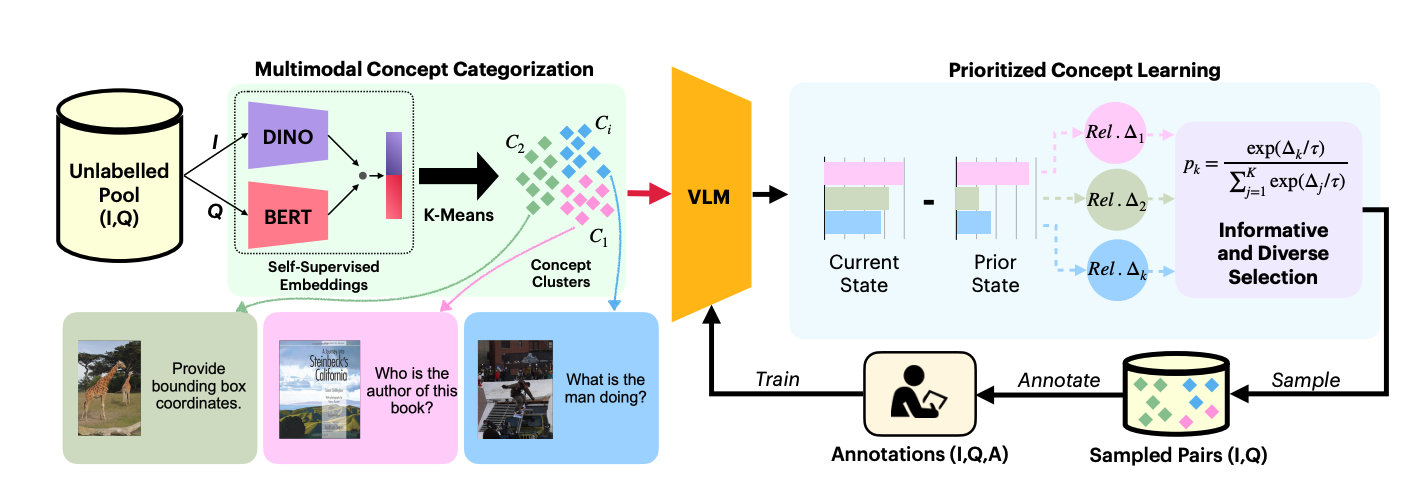

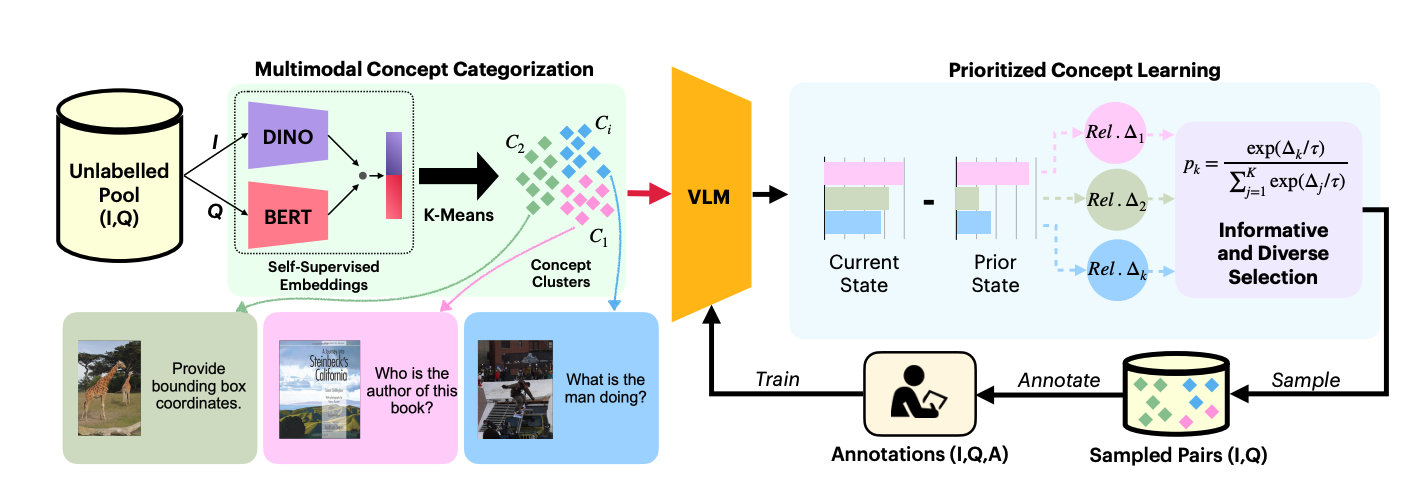

Learning What Matters: Prioritized Concept Learning via Relative Error-driven Sample Selection

Shivam Chandhok*, Qian Yang*, Oscar Mañas, Kanishk Jain, Leonid Sigal^, Aishwarya Agrawal^

*equal first author contribution

^equal last author contribution

arXiv preprint, arXiv:2506.01085, 2025

[ArXiv]

|

|

The Promise of RL for Autoregressive Image Editing

Saba Ahmadi*, Rabiul Awal*, Ankur Sikarwar*, Amirhossein Kazemnejad*, Ge Ya Luo, Juan A. Rodriguez, Sai Rajeswar, Siva Reddy, Christopher Pal, Benno Krojer, Aishwarya Agrawal

*equal first author contribution

NeurIPS 2025

[ArXiv]

|

|

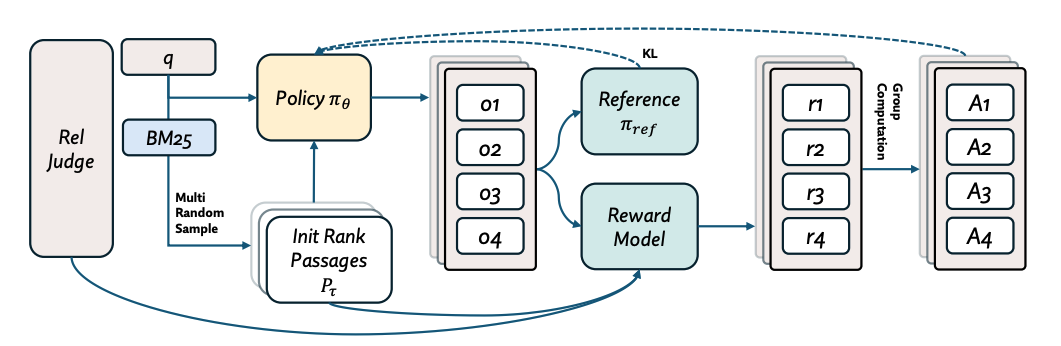

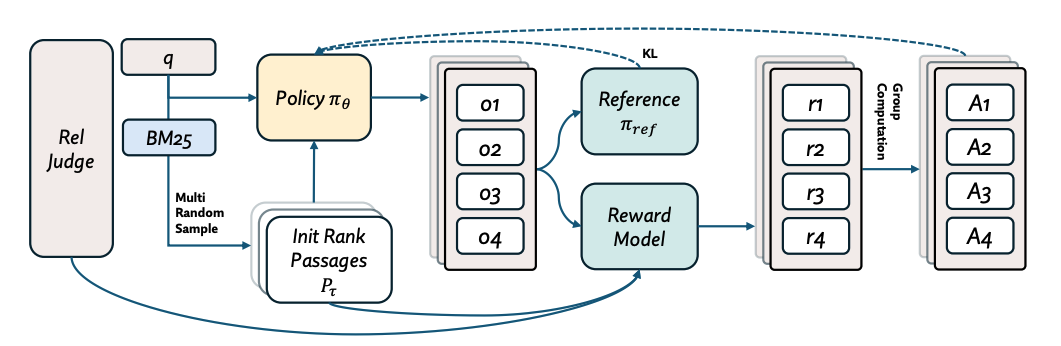

REARANK: Reasoning Re-ranking Agent via Reinforcement Learning

Le Zhang, Bo Wang, Xipeng Qiu, Siva Reddy, Aishwarya Agrawal

EMNLP 2025

[ArXiv]

|

|

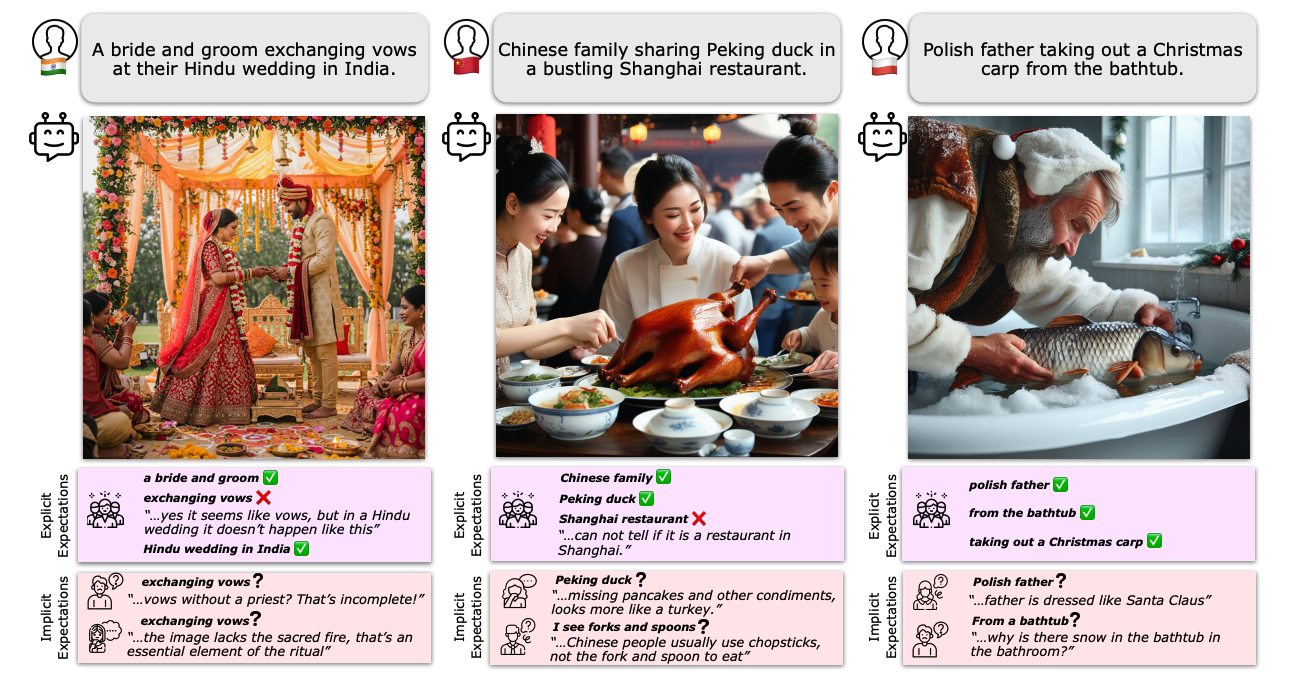

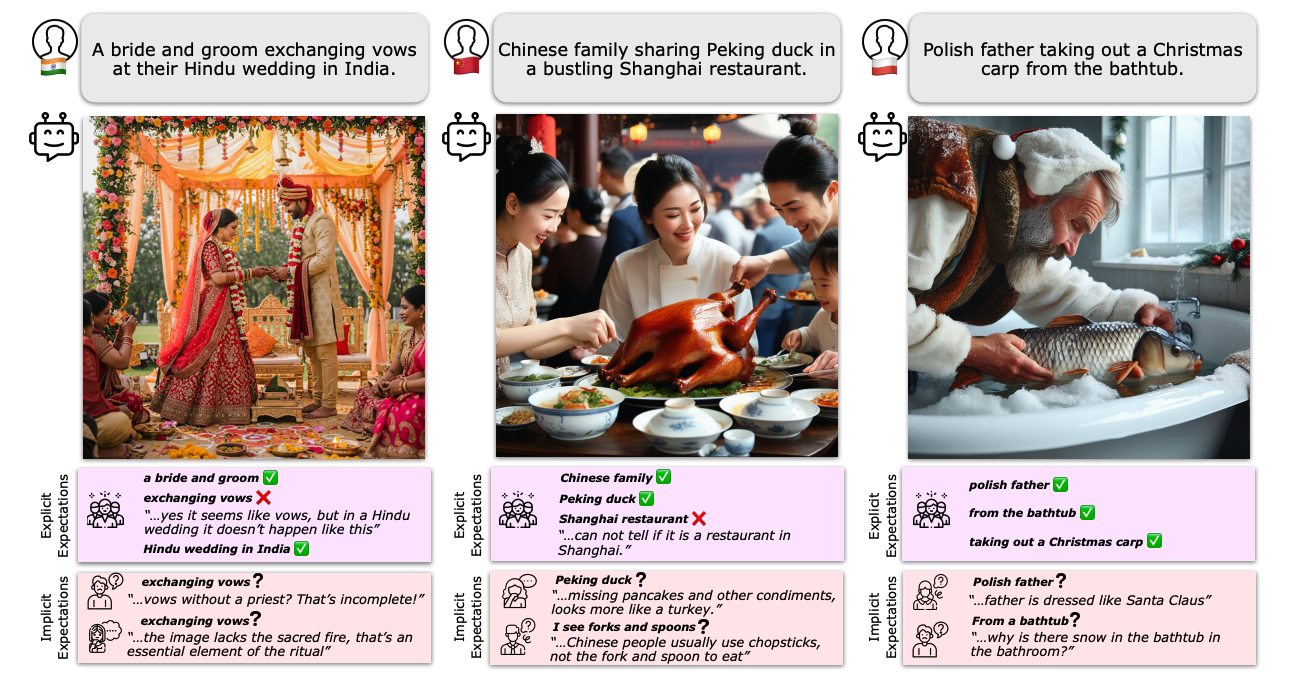

CulturalFrames: Assessing Cultural Expectation Alignment in Text-to-Image Models and Evaluation Metrics

Shravan Nayak, Mehar Bhatia, Xiaofeng Zhang, Verena Rieser, Lisa Anne Hendricks, Sjoerd van Steenkiste, Yash Goyal, Karolina Stańczak, Aishwarya Agrawal

EMNLP Findings 2025

[ArXiv]

|

|

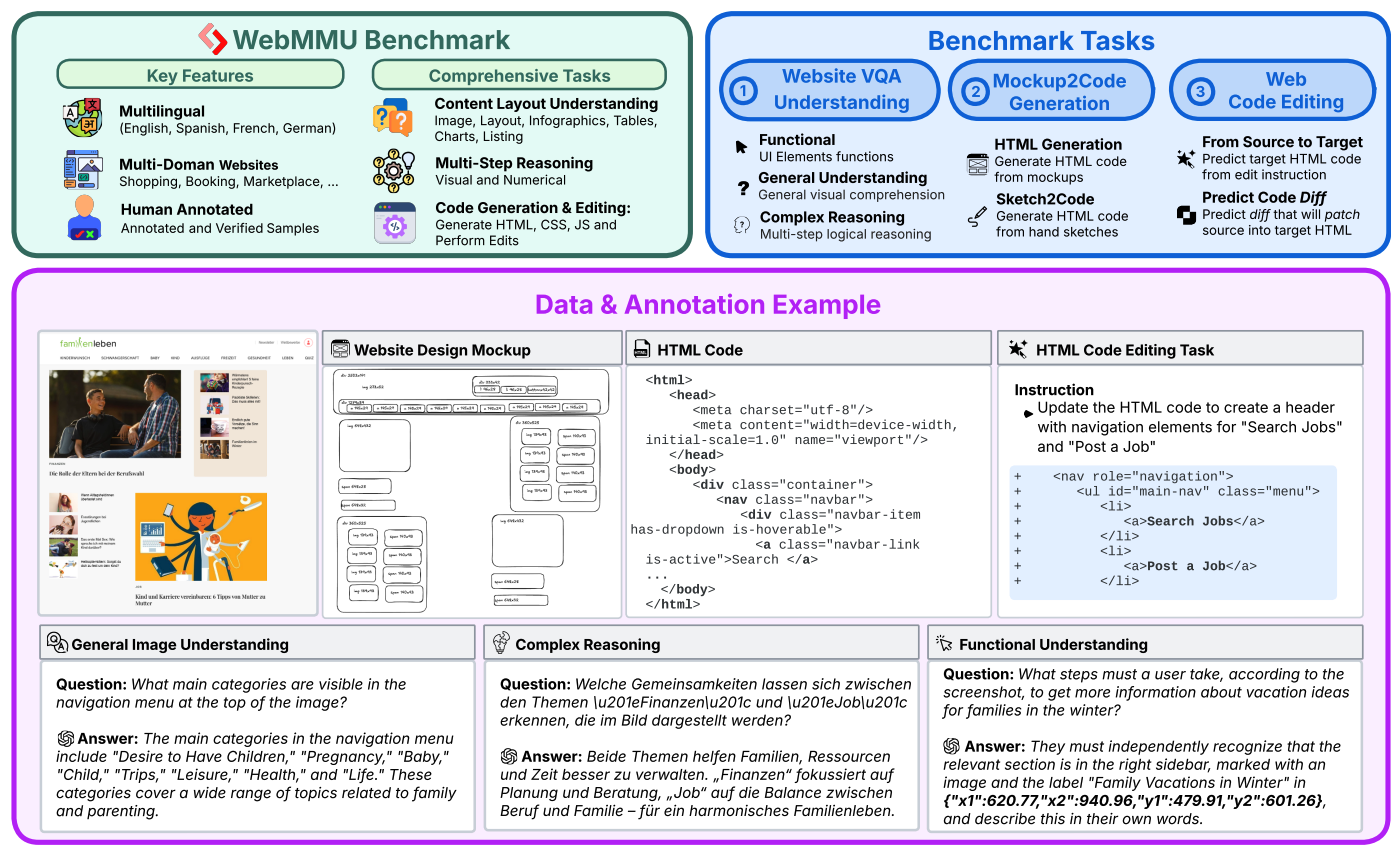

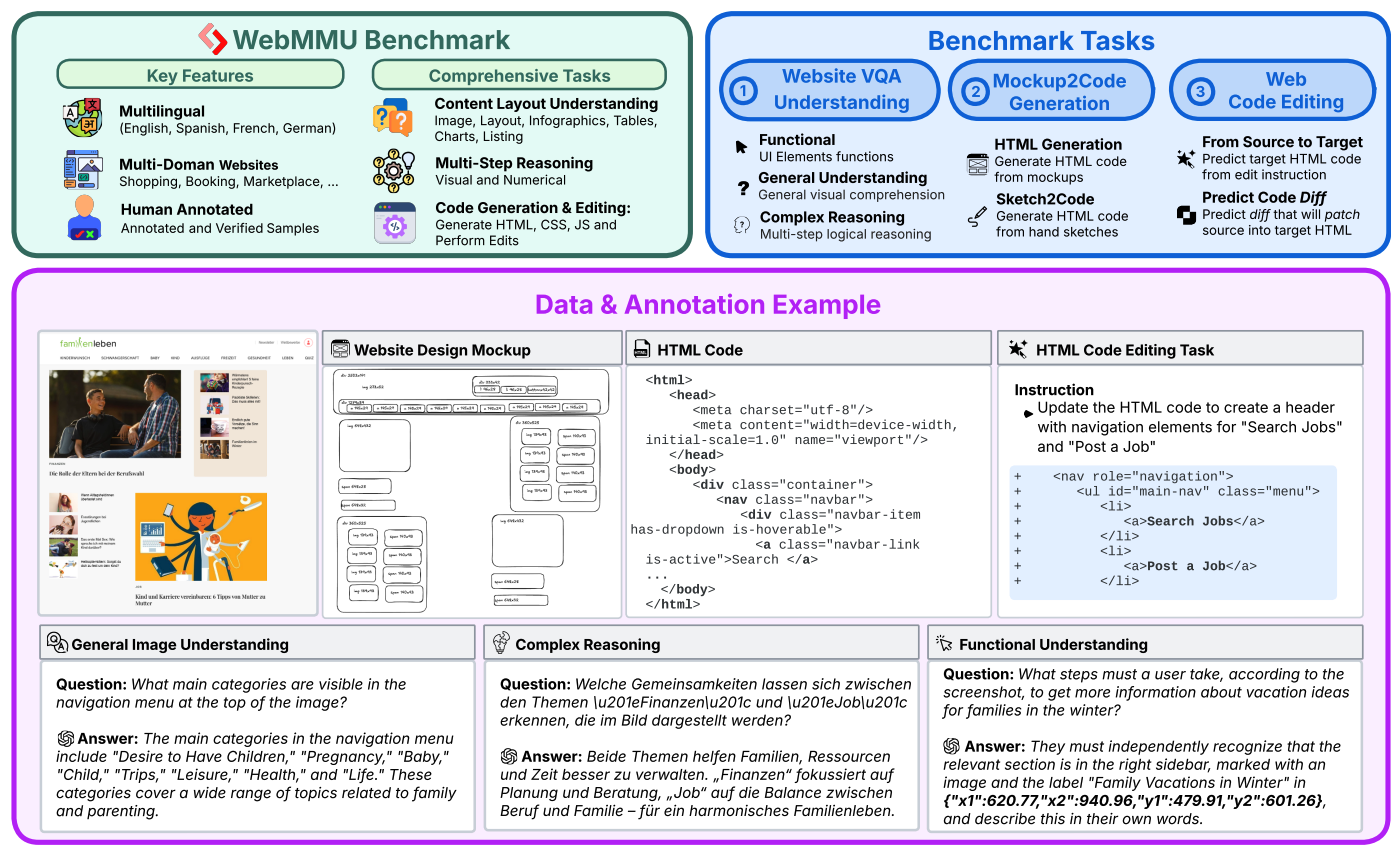

WebMMU: A Benchmark for Multimodal Multilingual Website Understanding and Code Generation

Rabiul Awal*, Mahsa Massoud*, Aarash Feizi^, Zichao Li^, Suyuchen Wang, Christopher Pal, Aishwarya Agrawal, David Vazquez, Siva Reddy, Juan A. Rodriguez, Perouz Taslakian, Spandana Gella, Sai Rajeswar

*co-first authors

^co-second authors

EMNLP 2025

[ArXiv]

|

|

Controlling Multimodal LLMs via Reward-guided Decoding

Oscar Mañas, Pierluca D'Oro, Koustuv Sinha, Adriana Romero-Soriano, Michal Drozdzal, Aishwarya Agrawal

ICCV 2025

[ArXiv]

|

|

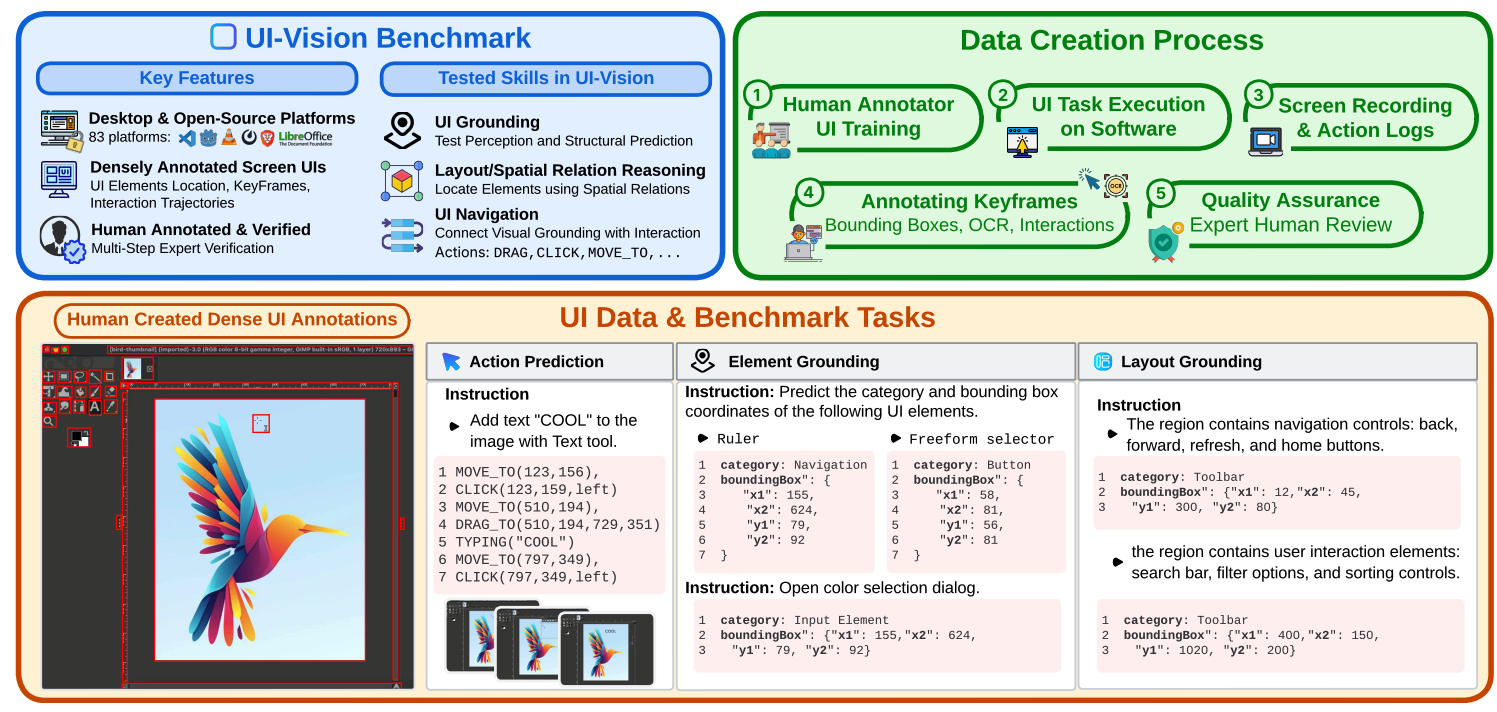

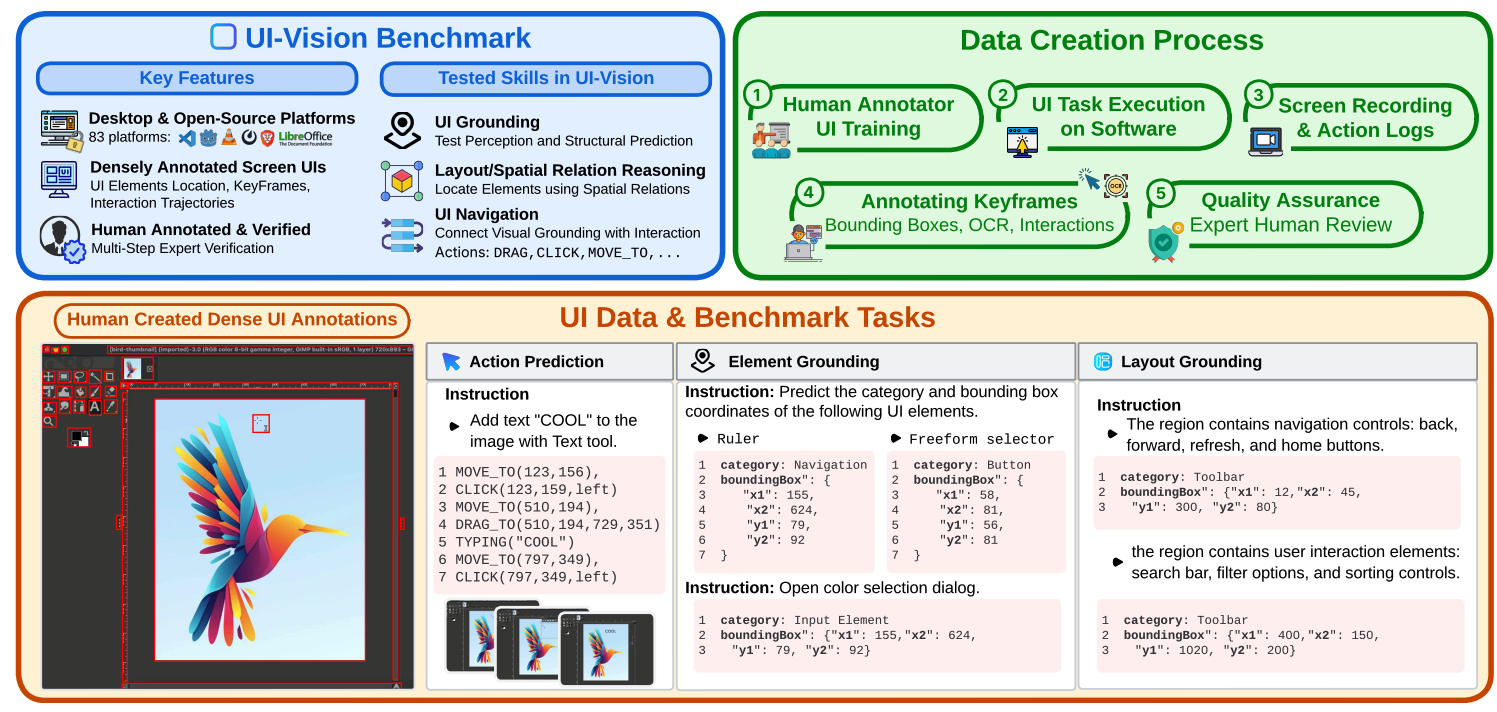

UI-Vision: A Desktop-centric GUI Benchmark for Visual Perception and Interaction

Shravan Nayak*, Xiangru Jian*, Kevin Qinghong Lin, Juan A. Rodriguez, Montek Kalsi, Rabiul Awal, Nicolas Chapados, M. Tamer Özsu, Aishwarya Agrawal, David Vazquez, Christopher Pal, Perouz Taslakian, Spandana Gella, Sai Rajeswar

*equal first author contribution

ICML 2025

[ArXiv]

|

|

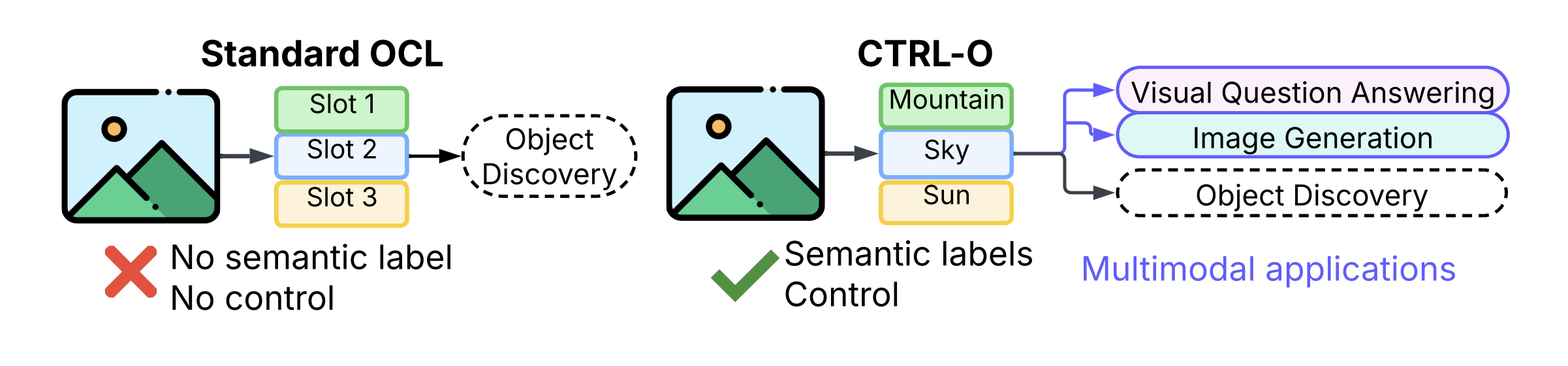

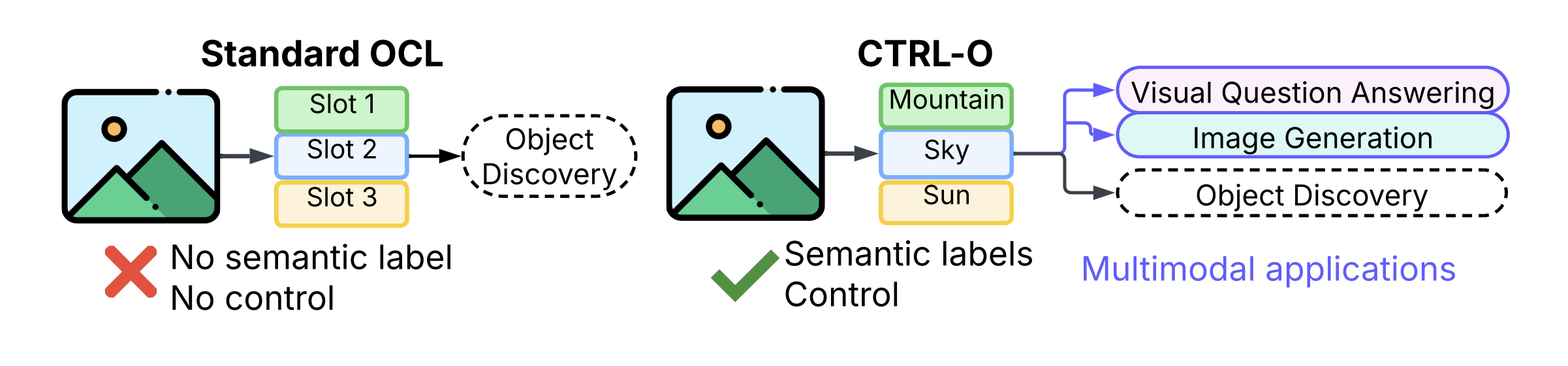

CTRL-O: Language-Controllable Object-Centric Visual Representation Learning

Aniket Didolkar*, Andrii Zadaianchuk*^, Rabiul Awal*, Maximilian Seitzer, Efstratios Gavves, Aishwarya Agrawal^

*equal first author contribution

^equal last author contribution

CVPR 2025

[ArXiv]

|

|

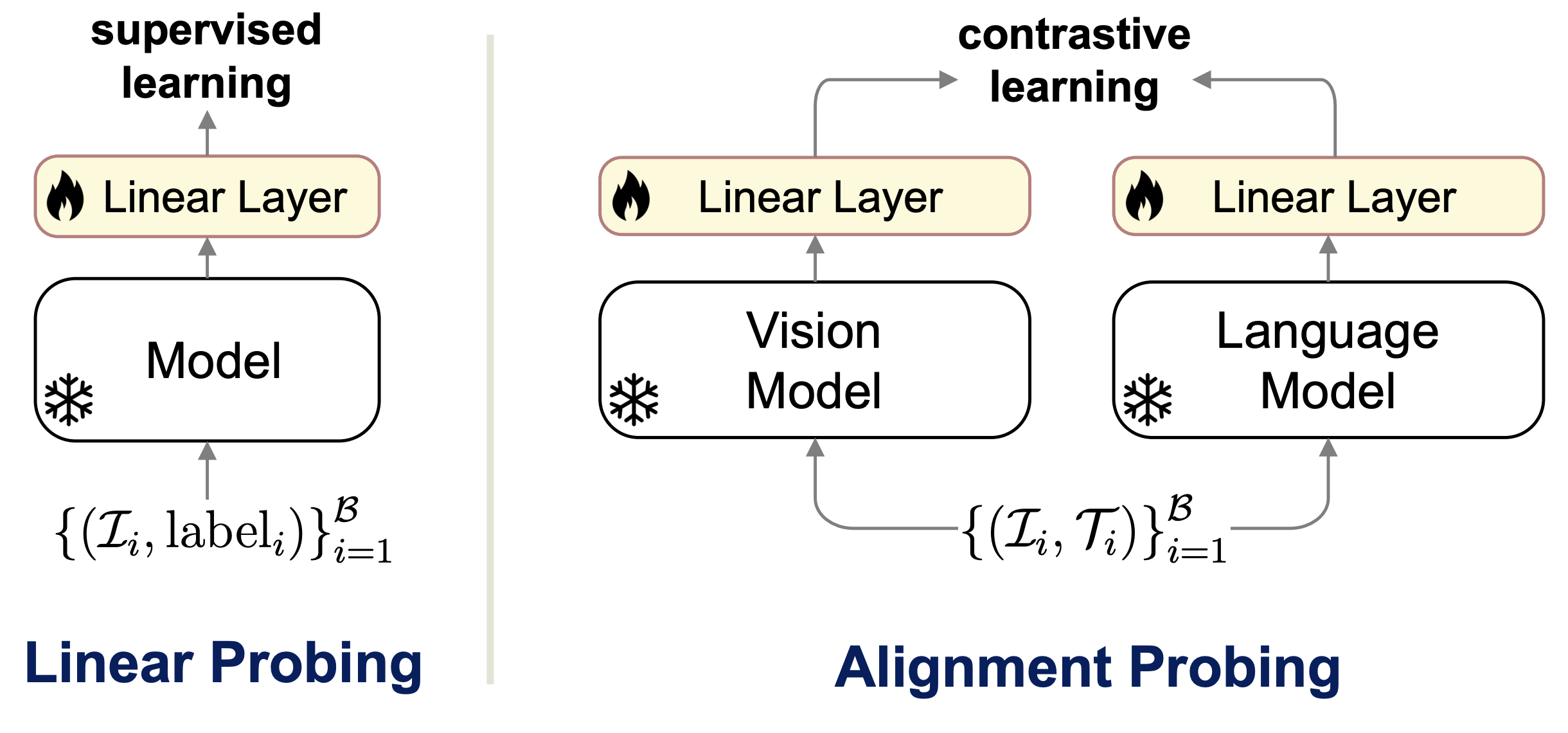

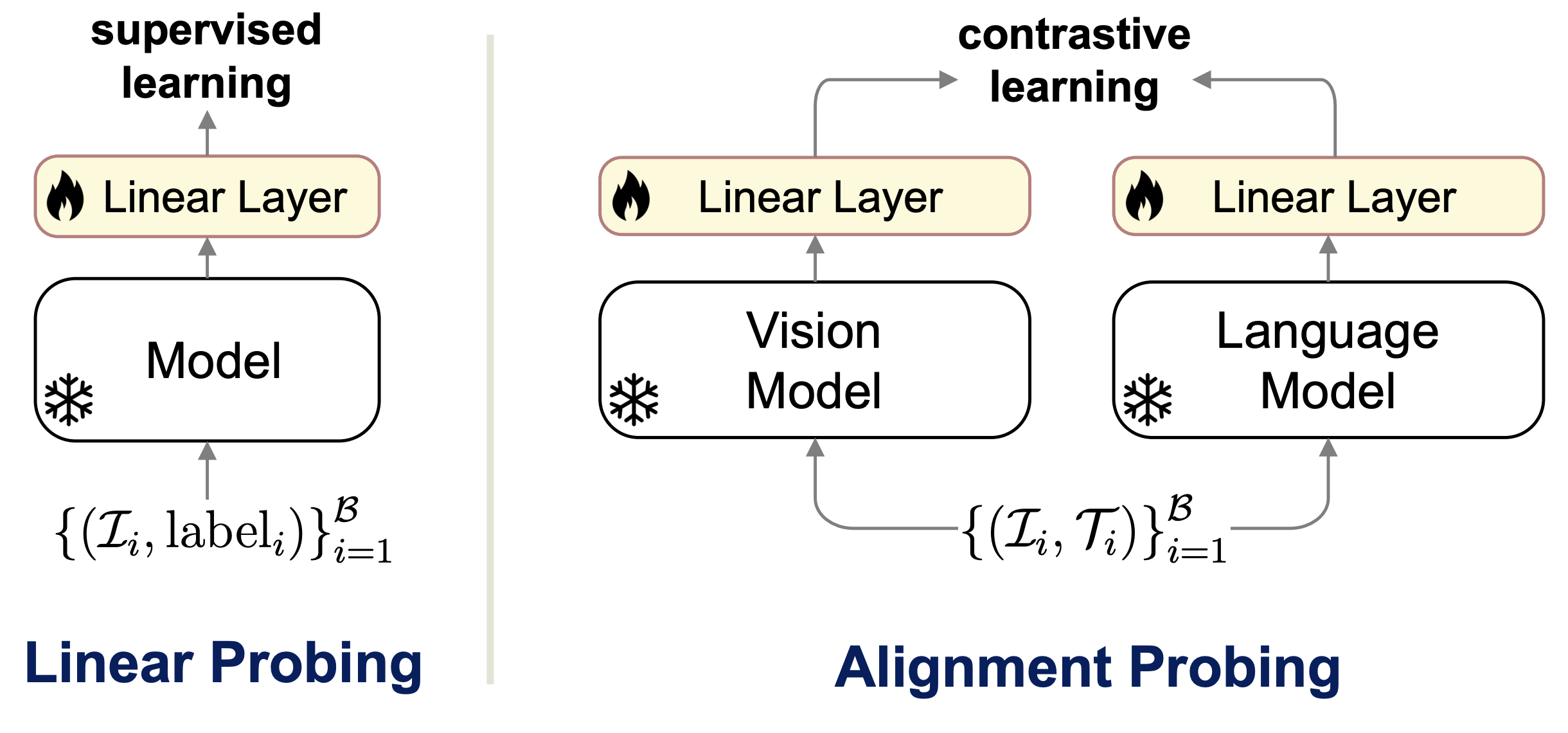

Assessing and Learning Alignment of Unimodal Vision and Language Models

Le Zhang, Qian Yang, Aishwarya Agrawal

CVPR 2025 [Highlight Poster]

[ArXiv]

|

|

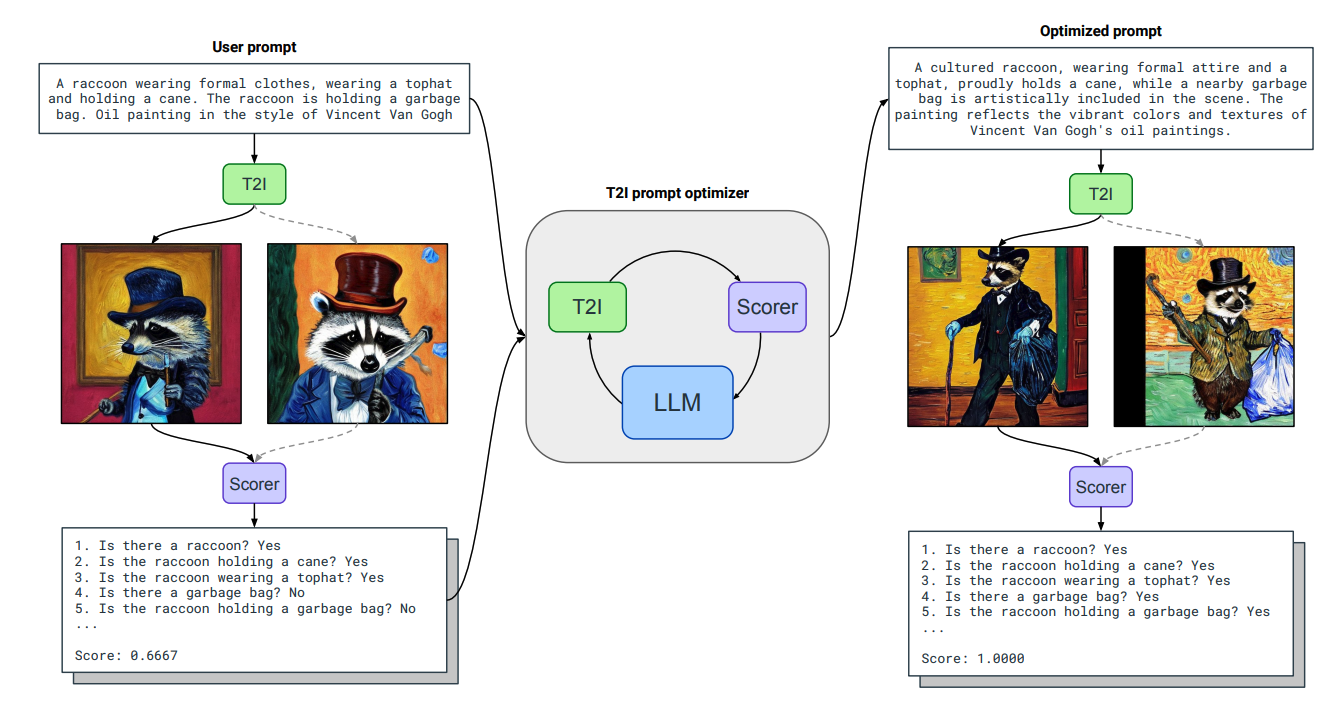

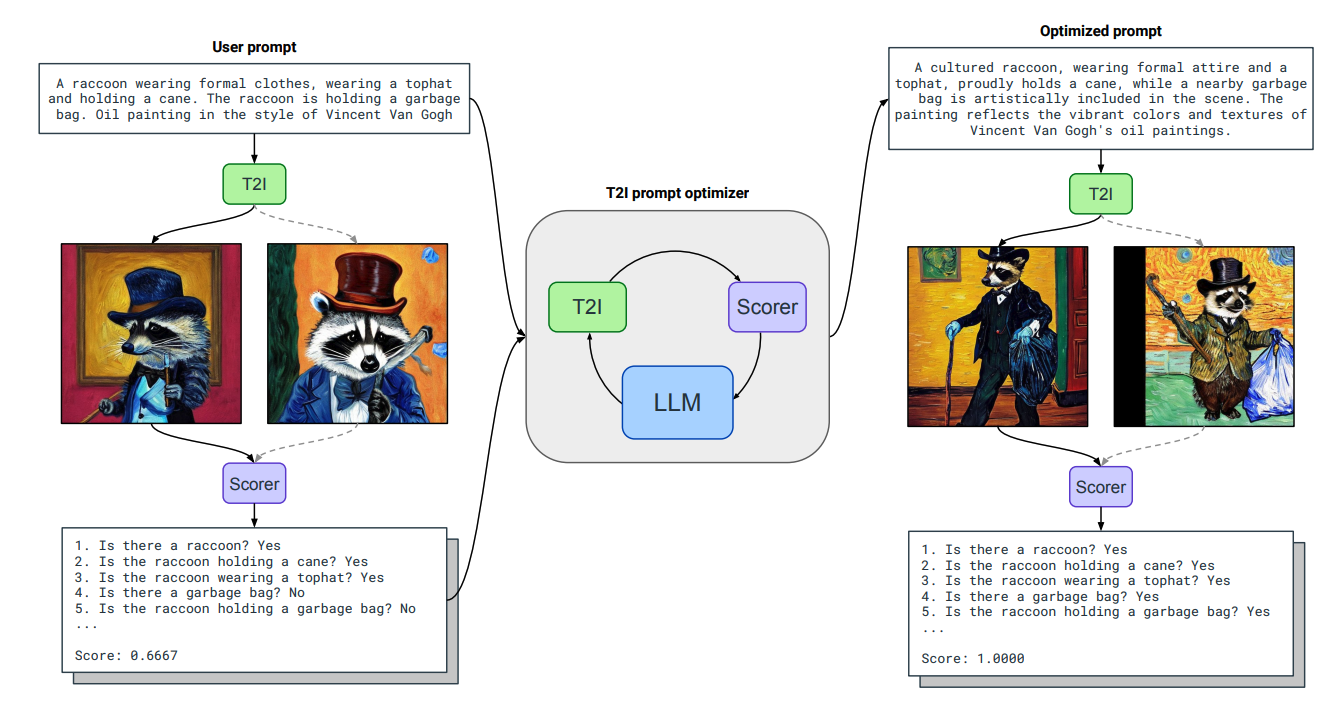

Improving Text-to-Image Consistency via Automatic Prompt Optimization

Oscar Mañas, Pietro Astolfi, Melissa Hall, Candace Ross, Jack Urbanek, Adina Williams, Aishwarya Agrawal, Adriana Romero-Soriano, Michal Drozdzal

TMLR 2024 [Featured Certification]

[ArXiv]

|

|

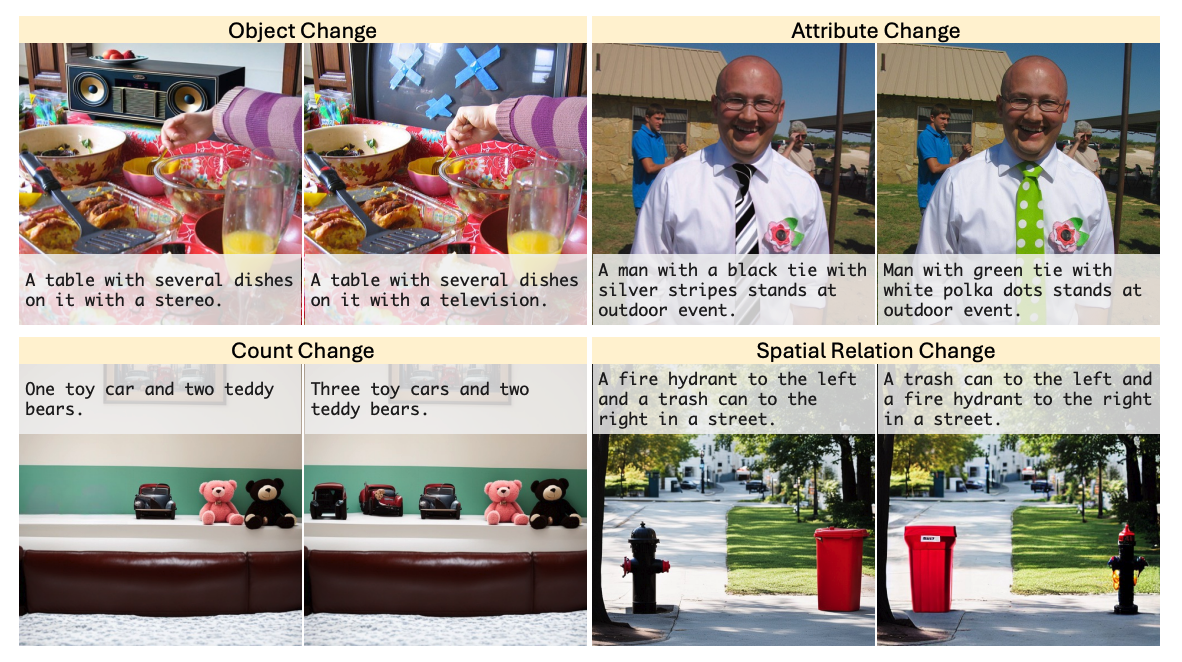

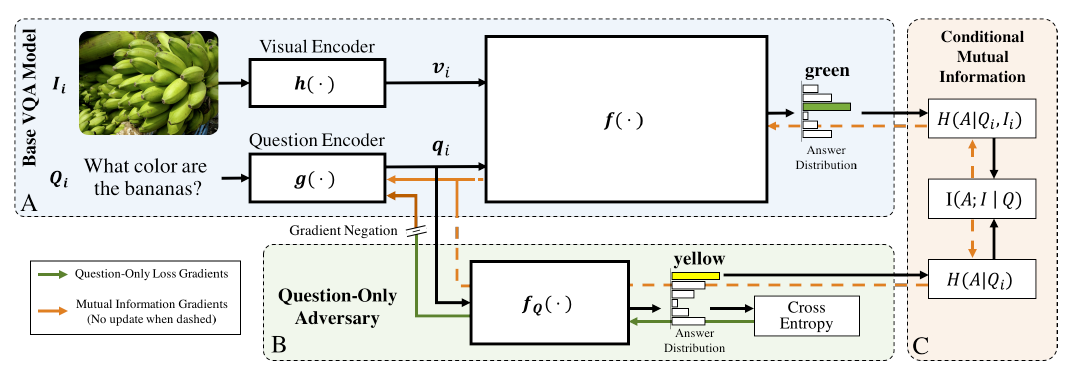

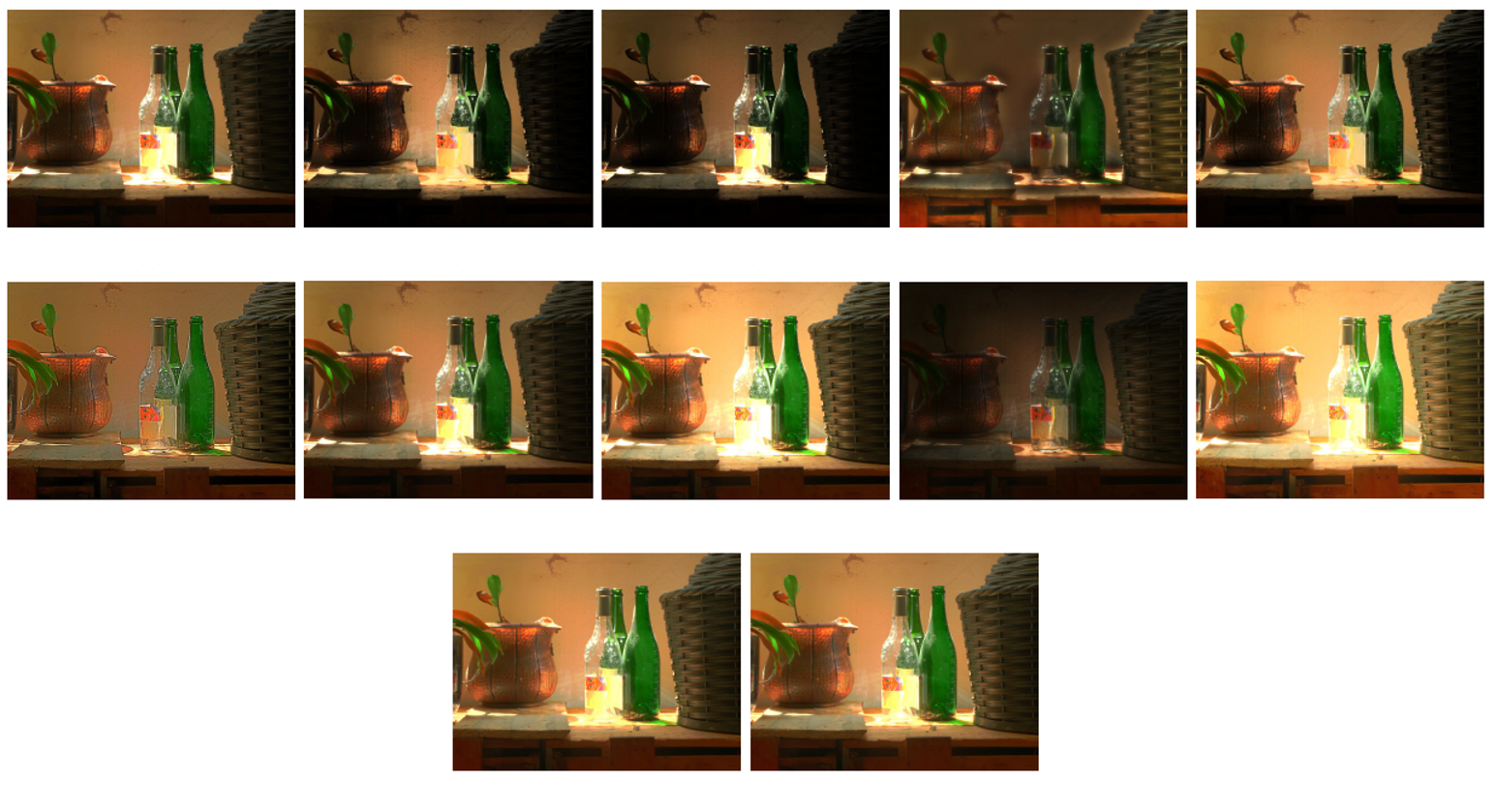

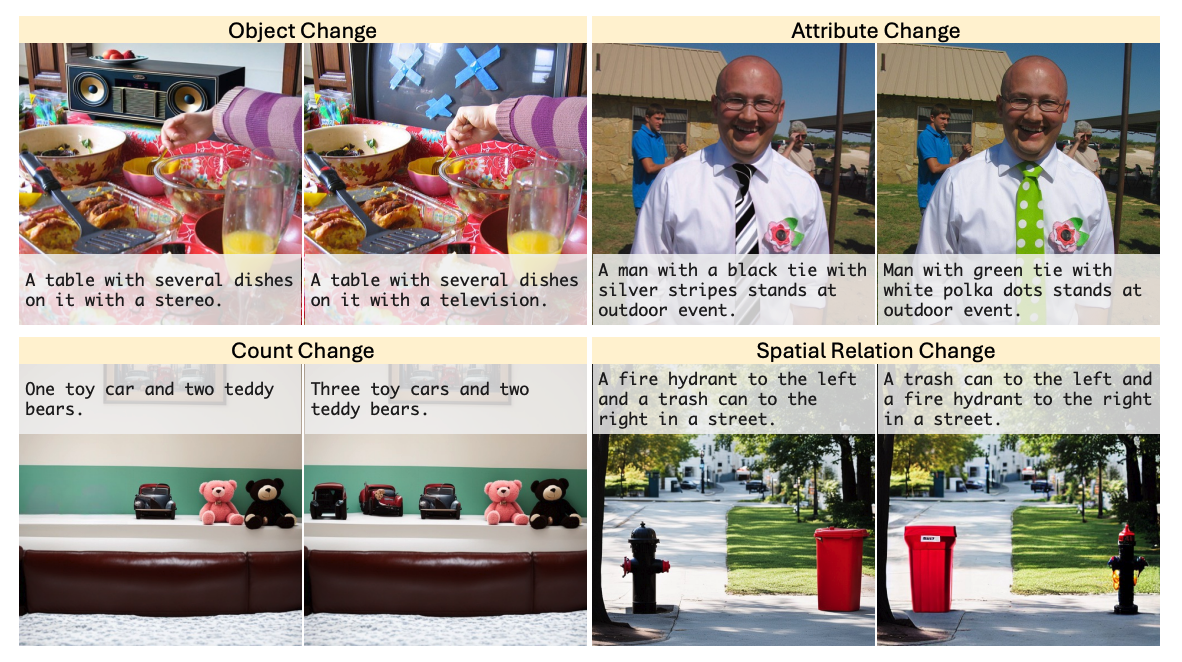

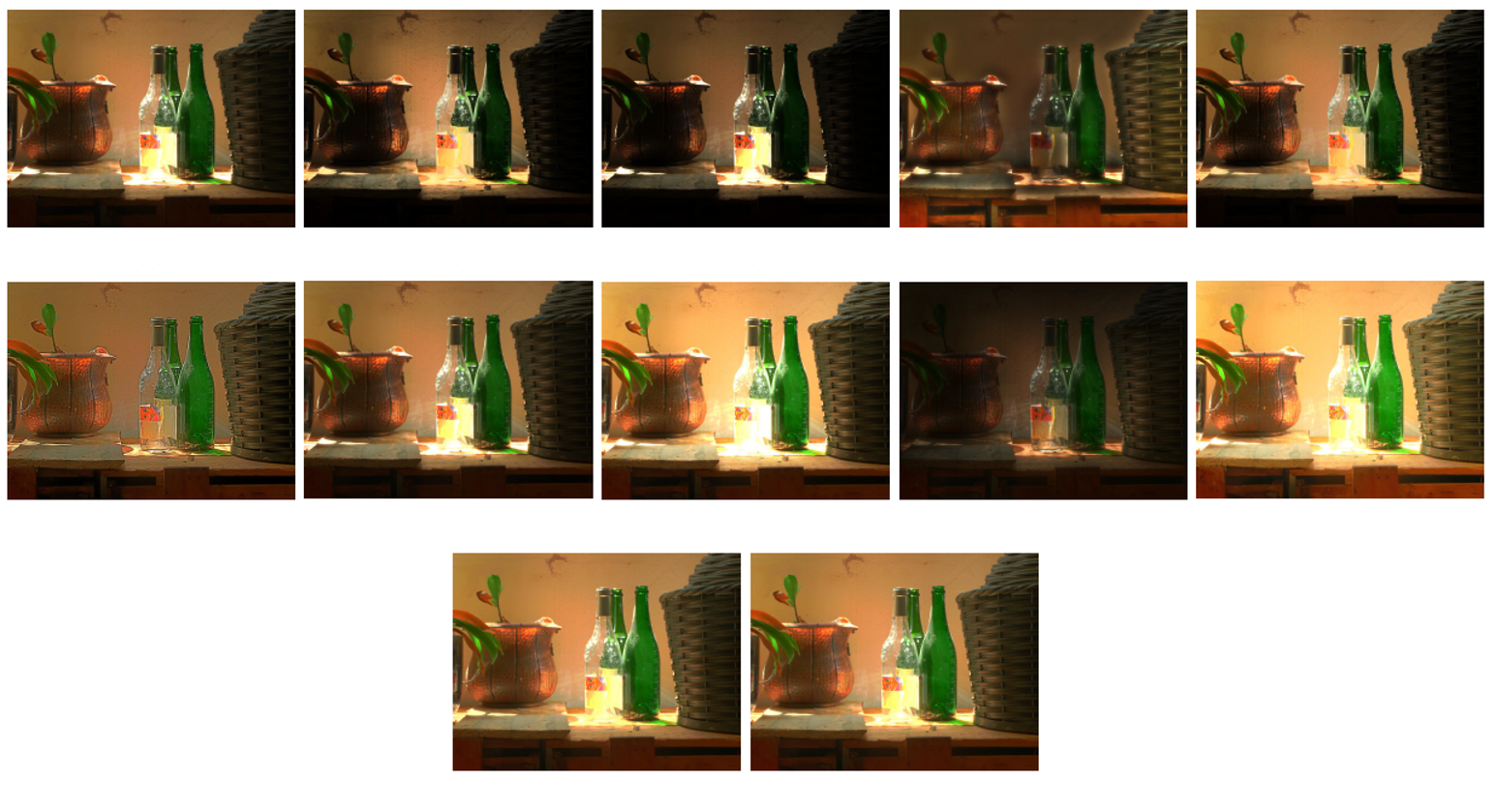

VisMin: Visual Minimal-Change Understanding

Rabiul Awal*, Saba Ahmadi*, Le Zhang*, Aishwarya Agrawal

*equal contribution

NeurIPS 2024

[ArXiv]

|

|

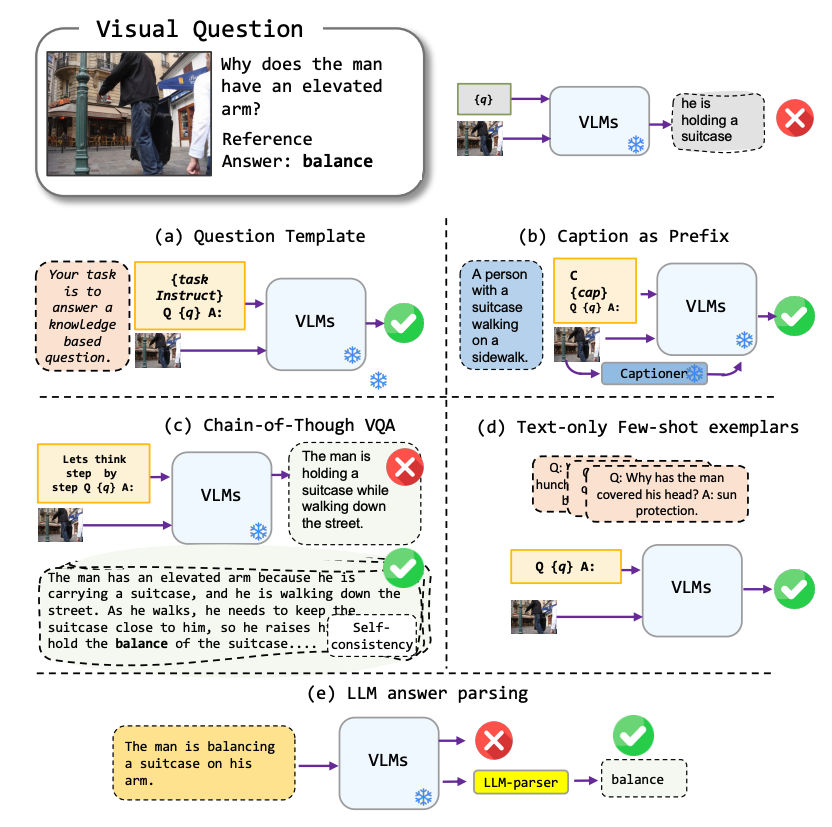

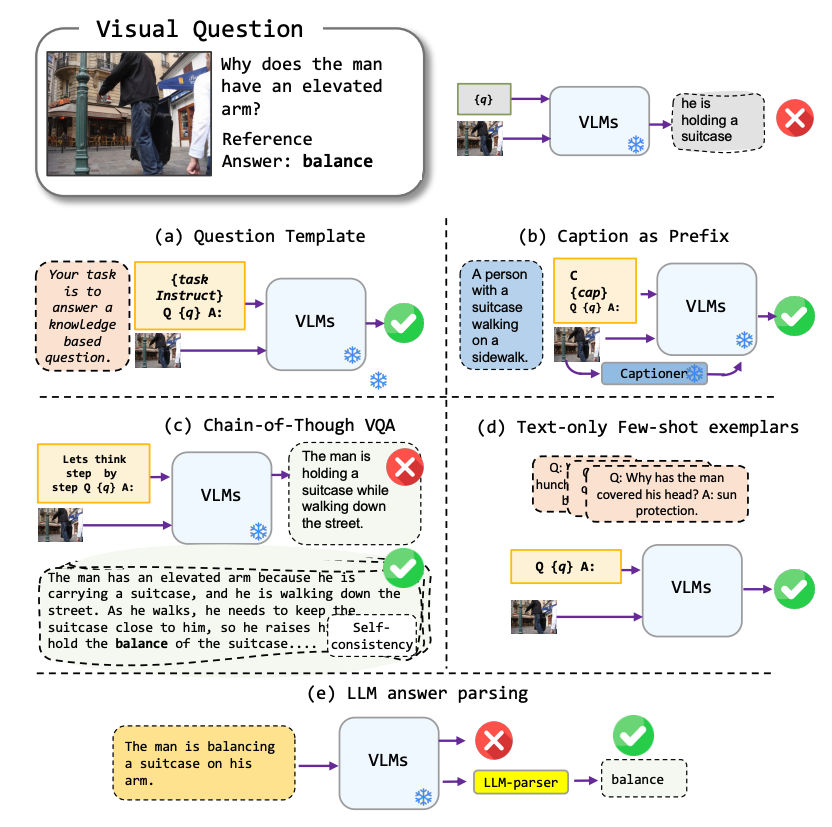

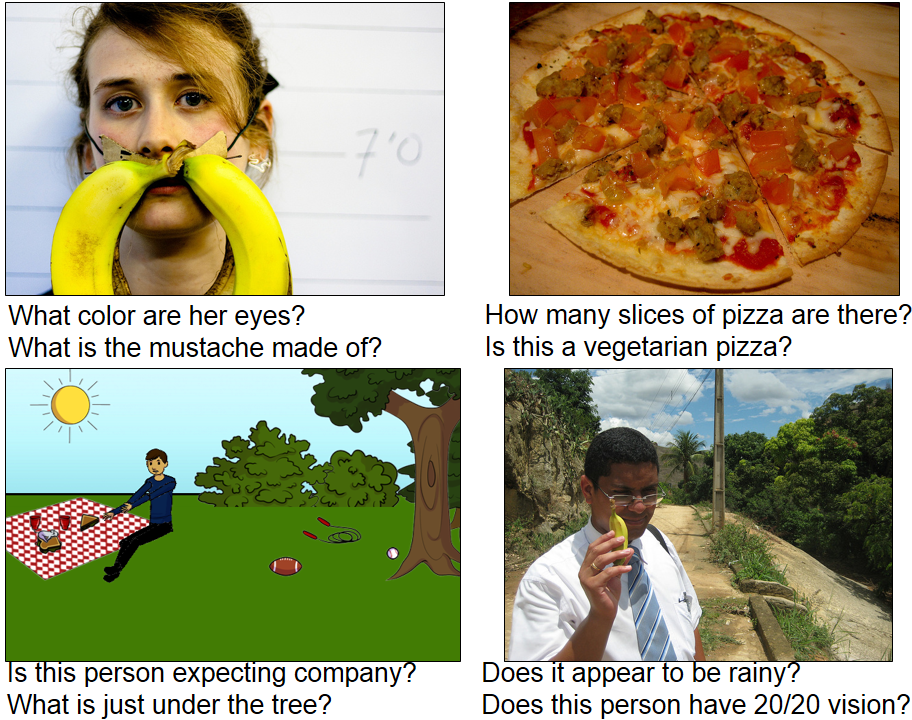

Investigating Prompting Techniques for Zero- and Few-Shot Visual Question Answering

Rabiul Awal, Le Zhang, Aishwarya Agrawal

Multimodal Algorithmic Reasoning Workshop, NeurIPS 2024

[ArXiv]

|

|

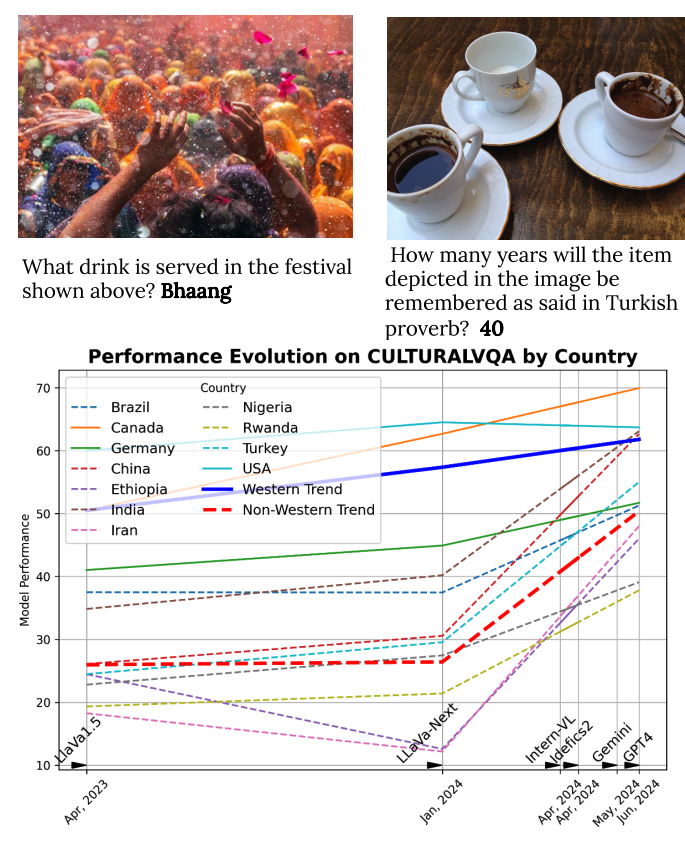

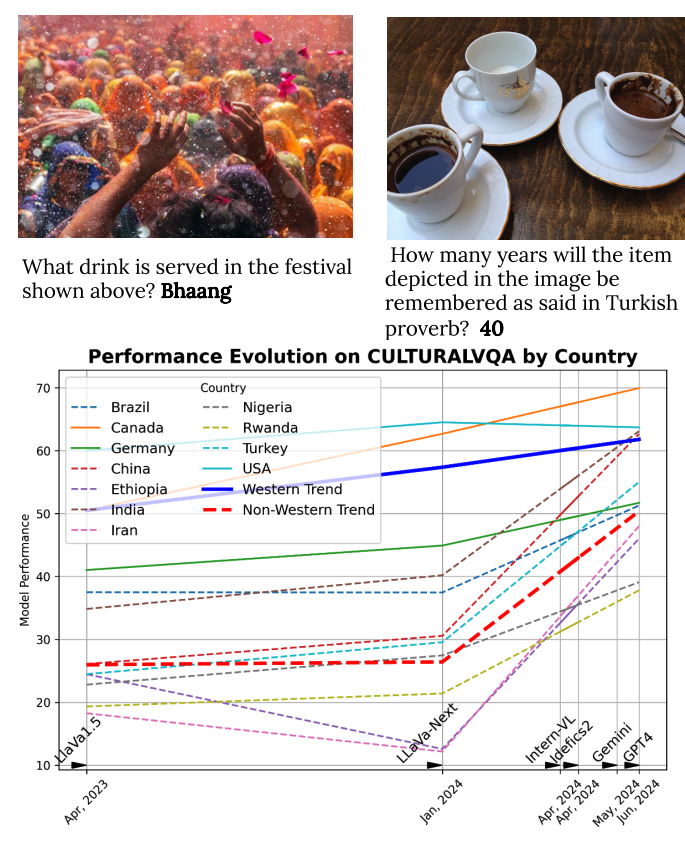

Benchmarking Vision Language Models for Cultural Understanding

Shravan Nayak, Kanishk Jain, Rabiul Awal, Siva Reddy, Sjoerd van Steenkiste, Lisa Anne Hendricks, Karolina Stańczak, Aishwarya Agrawal

EMNLP 2024 [Oral]

[ArXiv]

|

|

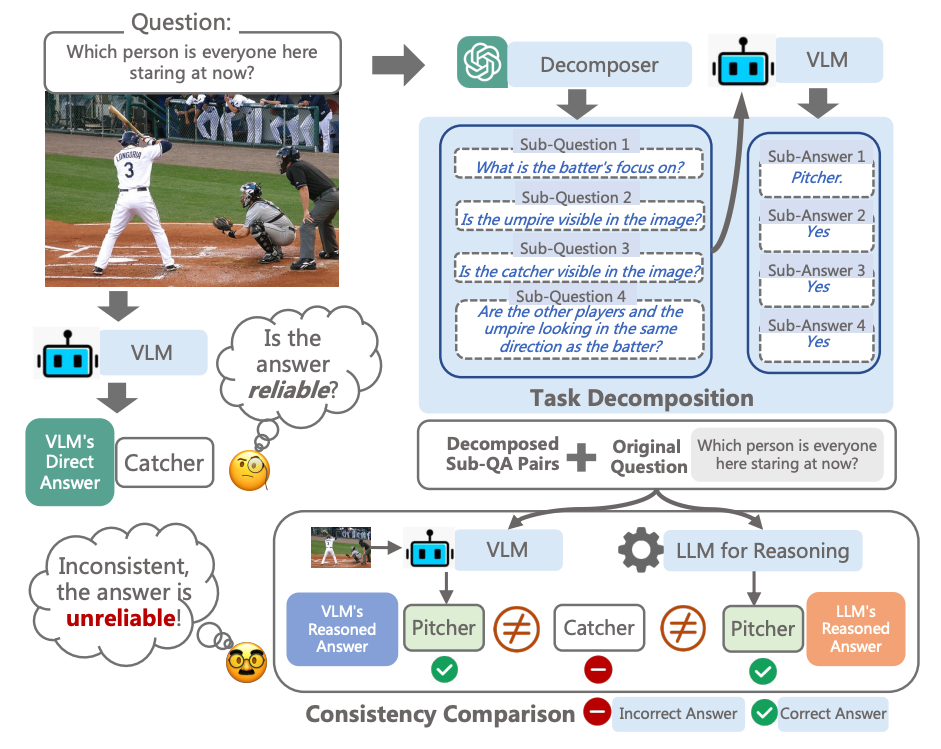

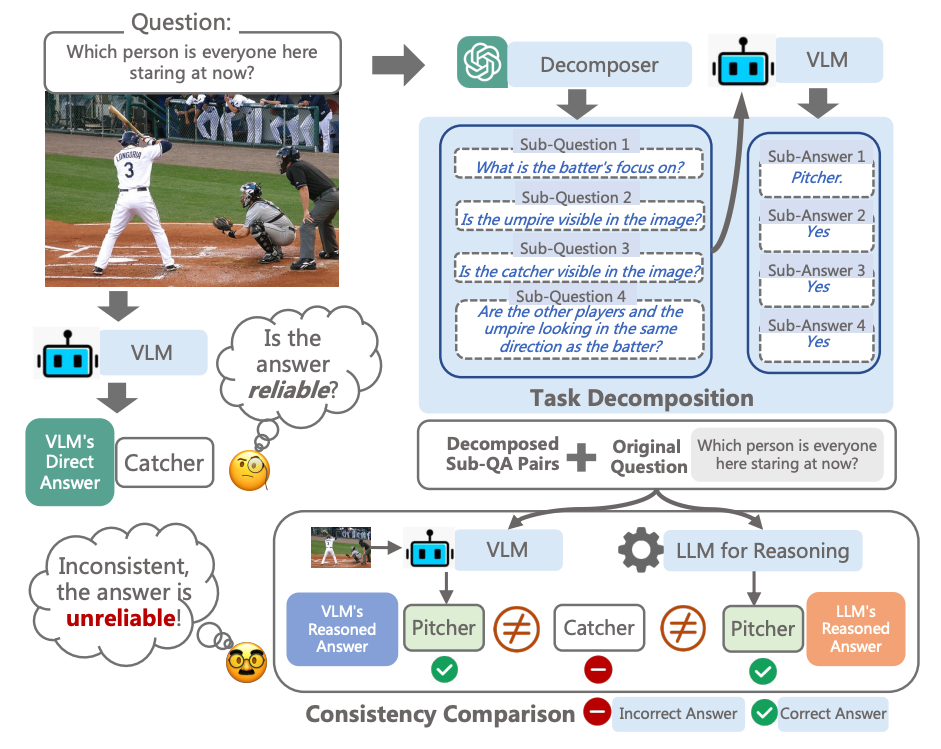

Decompose and Compare Consistency: Measuring VLMs’ Answer Reliability via Task-Decomposition Consistency Comparison

Qian Yang, Weixiang Yan, Aishwarya Agrawal

EMNLP 2024

[ArXiv]

|

|

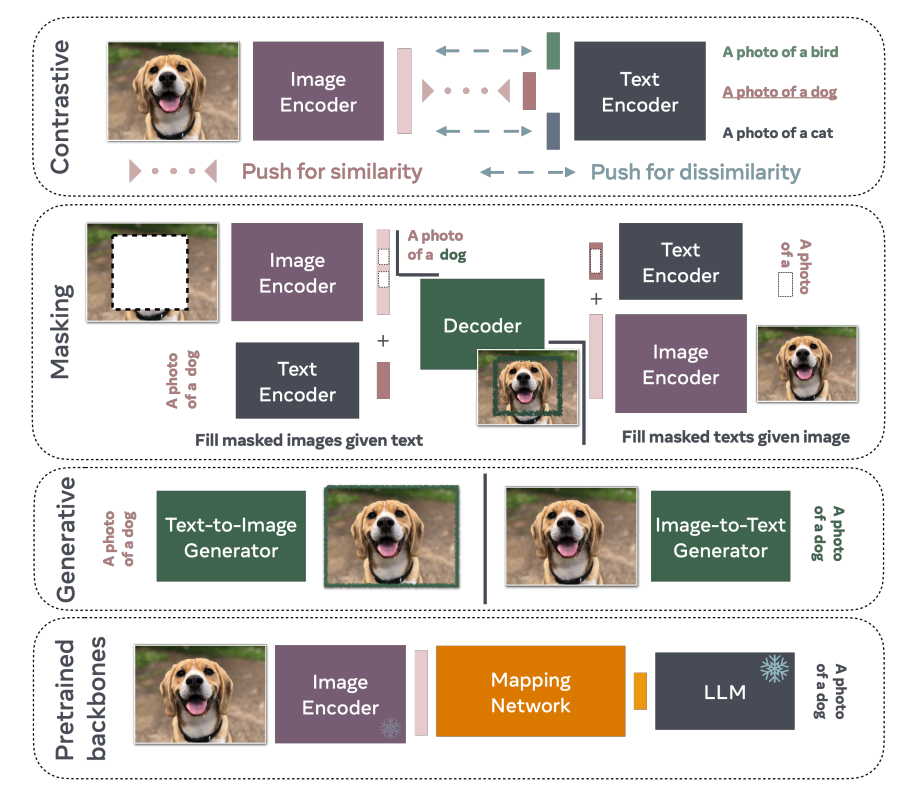

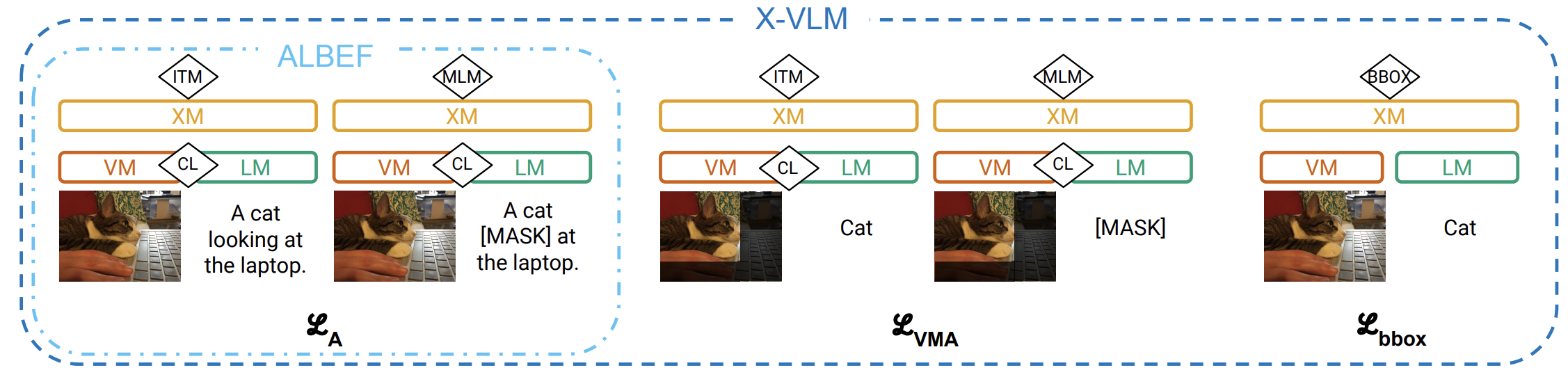

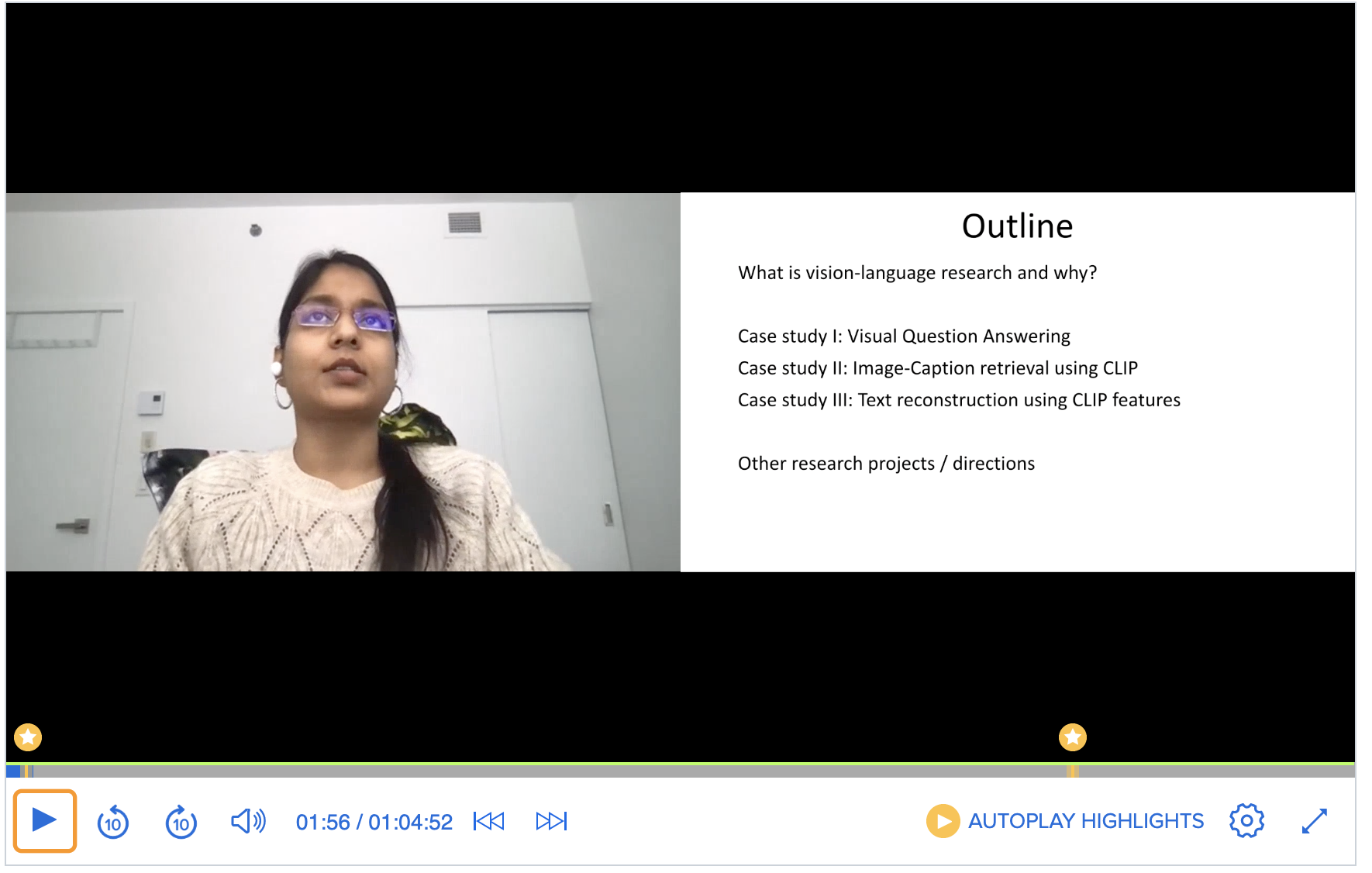

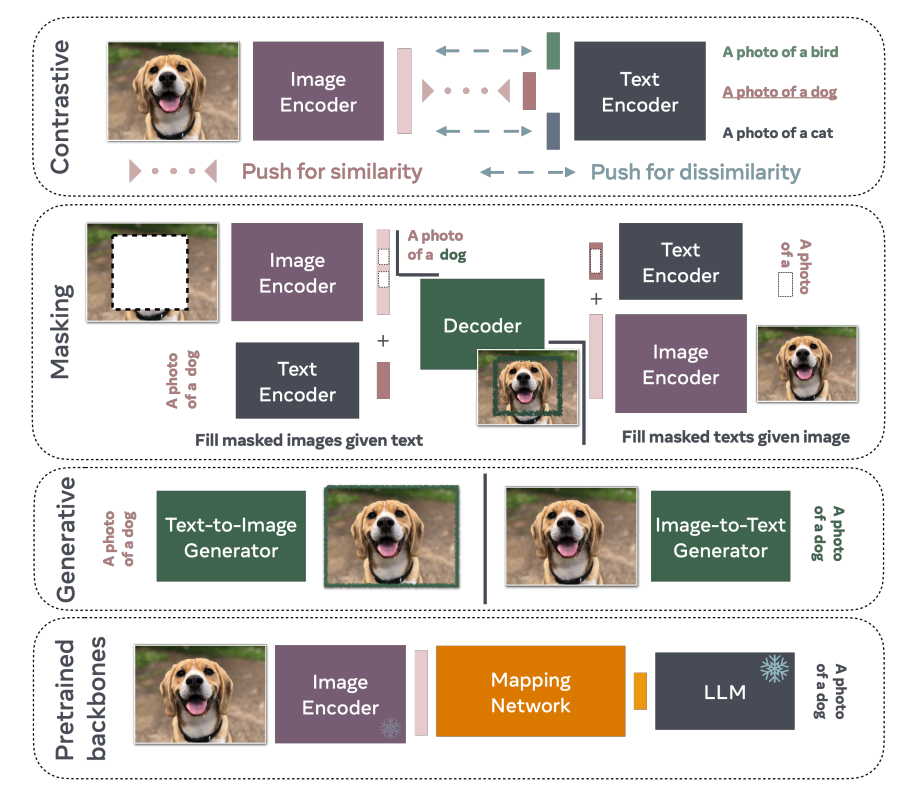

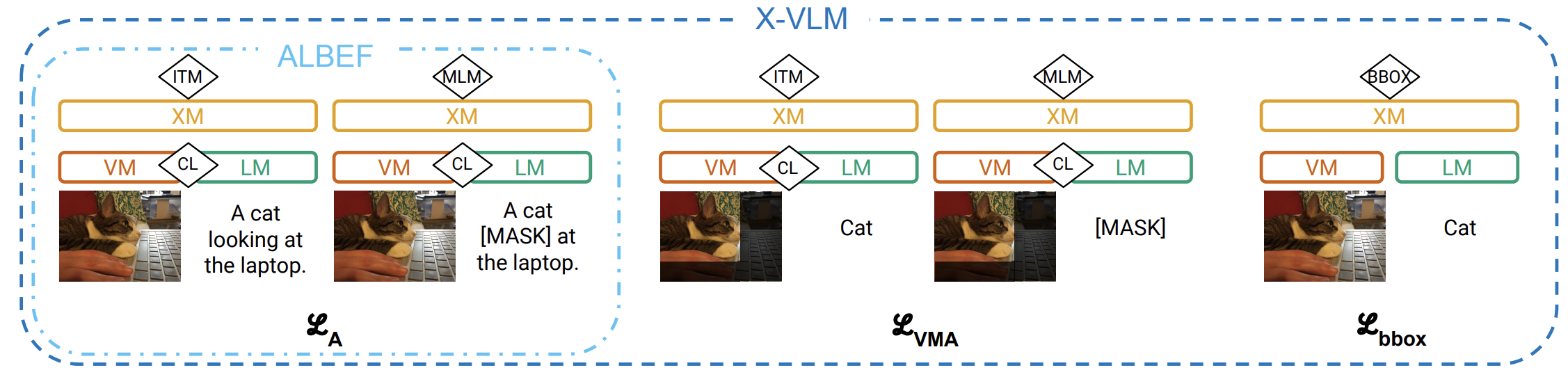

An Introduction to Vision-Language Modeling

Florian Bordes et al.

arXiv preprint, arXiv:2405.17247, 2024

[ArXiv]

|

|

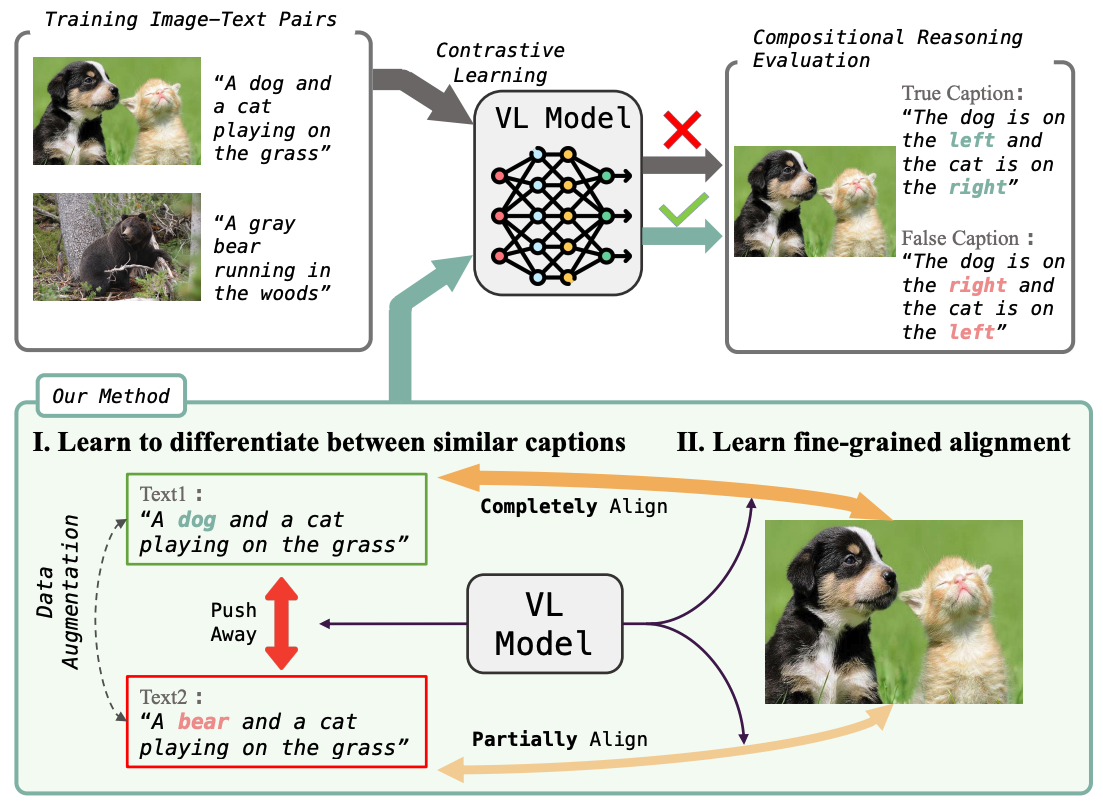

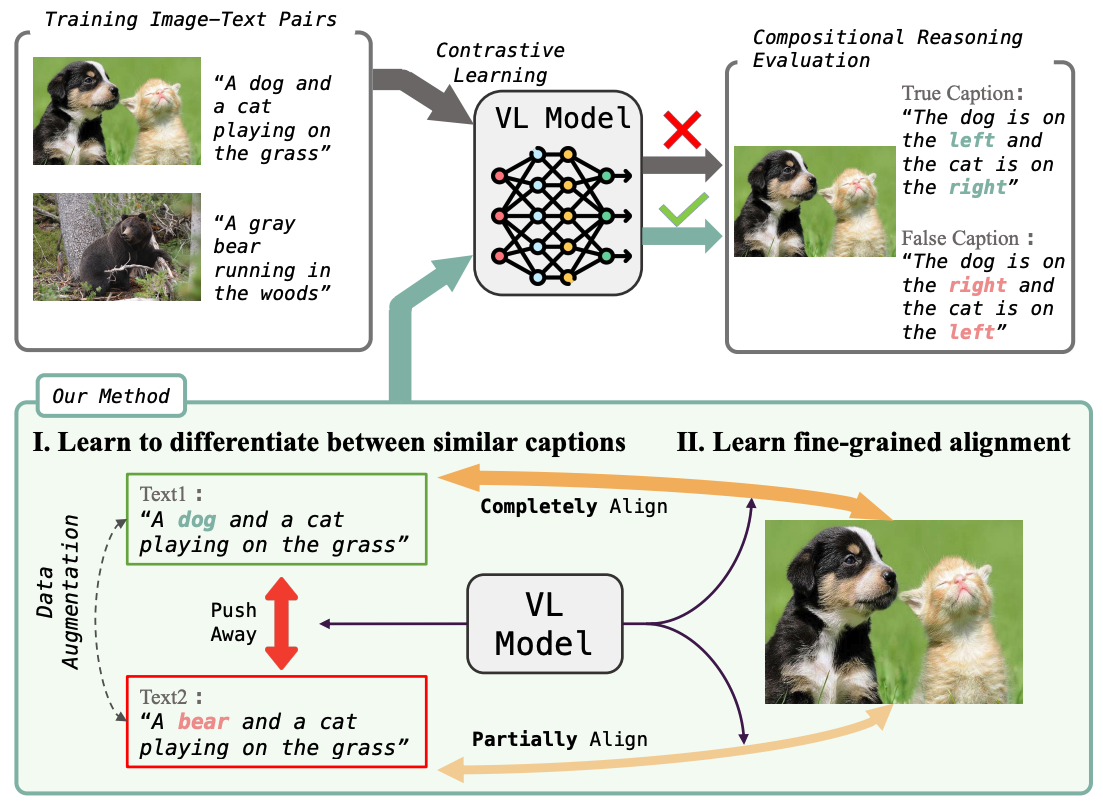

Contrasting Intra-Modal and Ranking Cross-Modal Hard Negatives to Enhance Visio-Linguistic Compositional Understanding

Le Zhang, Rabiul Awal, Aishwarya Agrawal

CVPR 2024

[ArXiv]

|

|

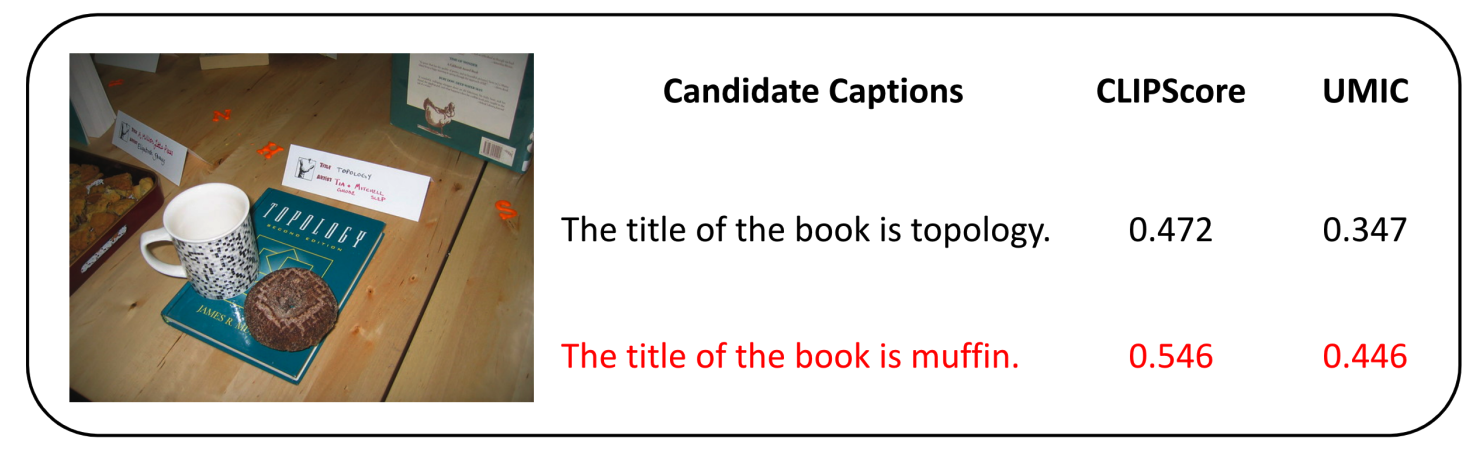

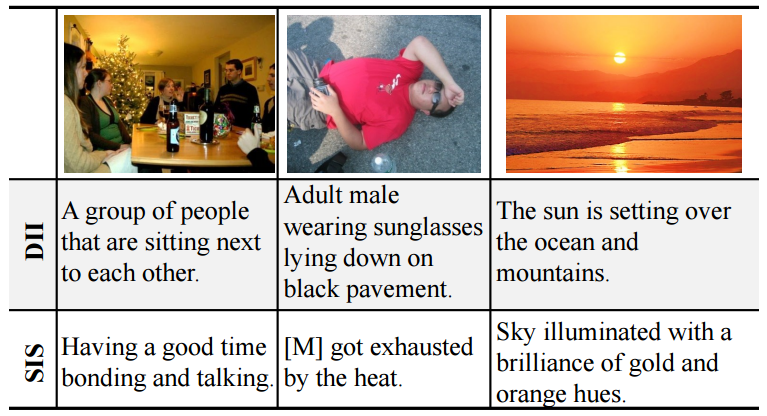

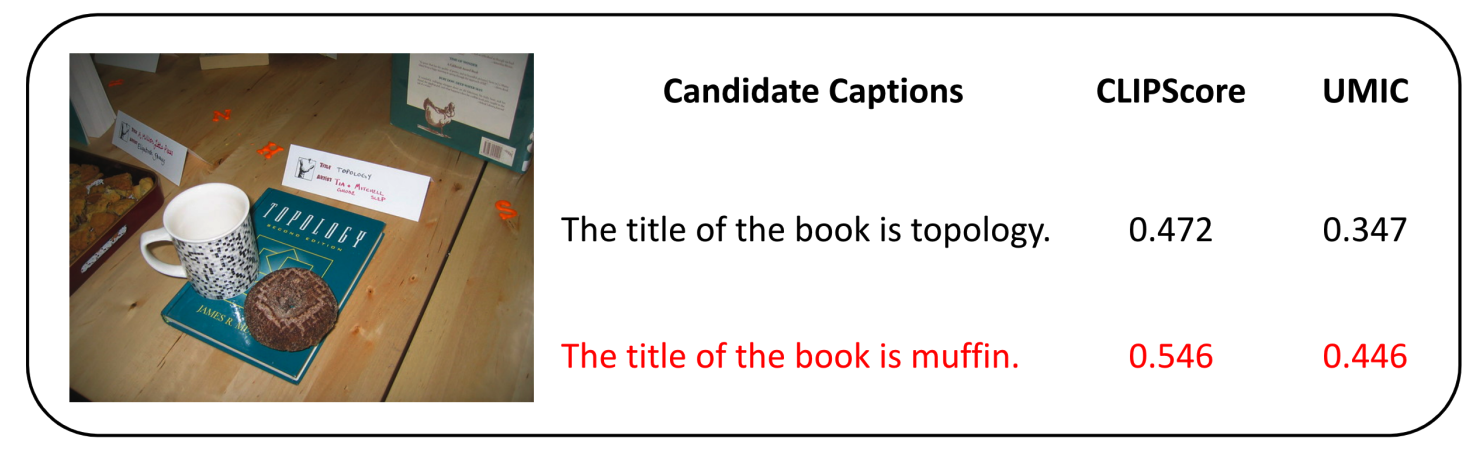

An Examination of the Robustness of Reference-Free Image Captioning Evaluation Metrics

Saba Ahmadi, Aishwarya Agrawal

EACL Findings 2024

[ArXiv]

|

|

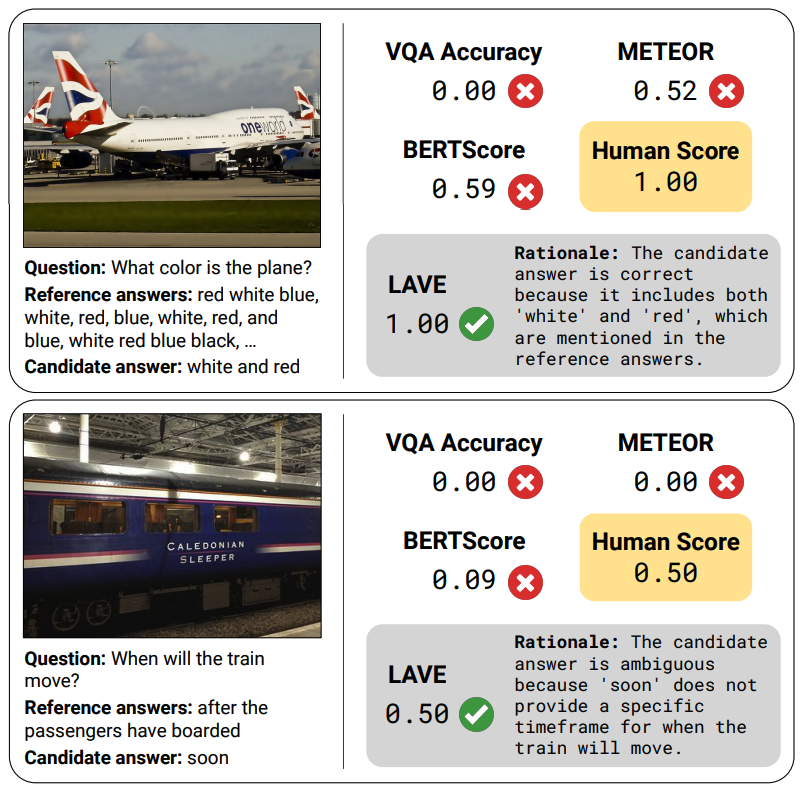

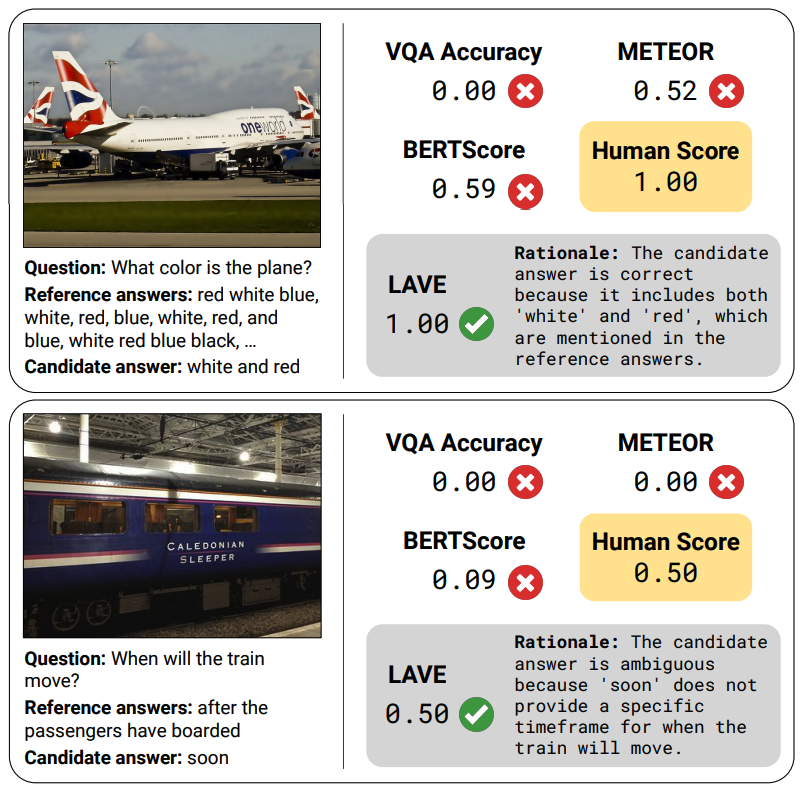

Improving Automatic VQA Evaluation Using Large Language Models

Oscar Mañas, Benno Krojer, Aishwarya Agrawal

AAAI 2024

[ArXiv]

|

|

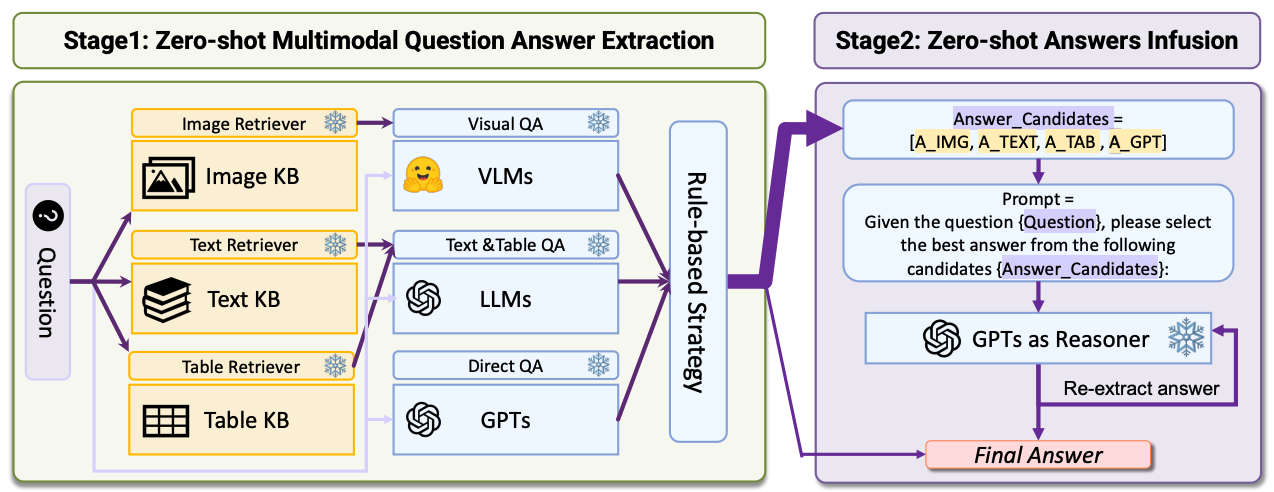

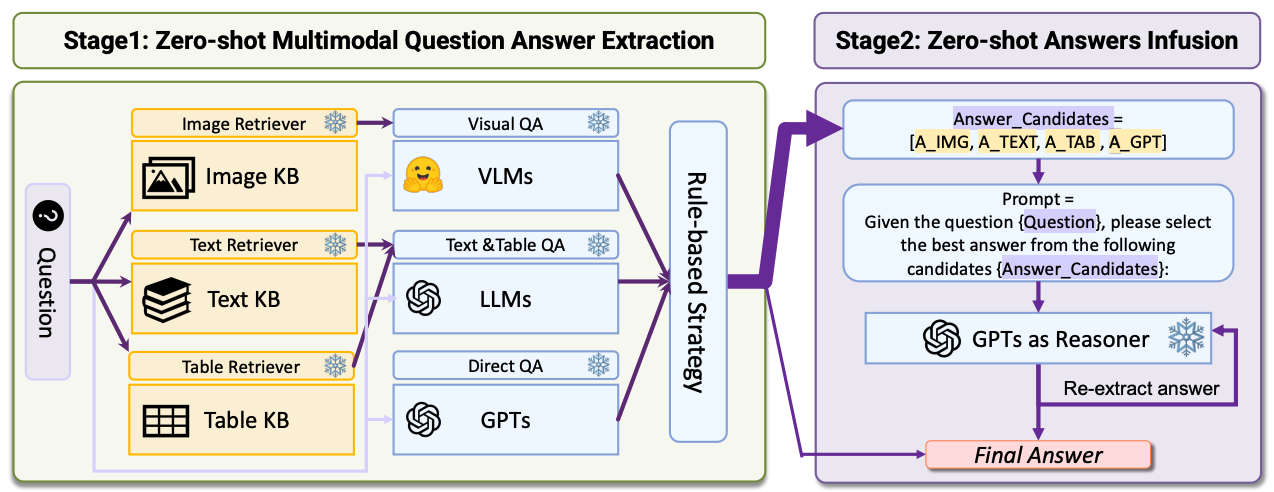

MoqaGPT: Zero-Shot Multi-modal Open-domain Question Answering with Large Language Model

Le Zhang, Yihong Wu, Fengran Mo, Jian-Yun Nie, Aishwarya Agrawal

EMNLP Findings 2023

[ArXiv]

|

|

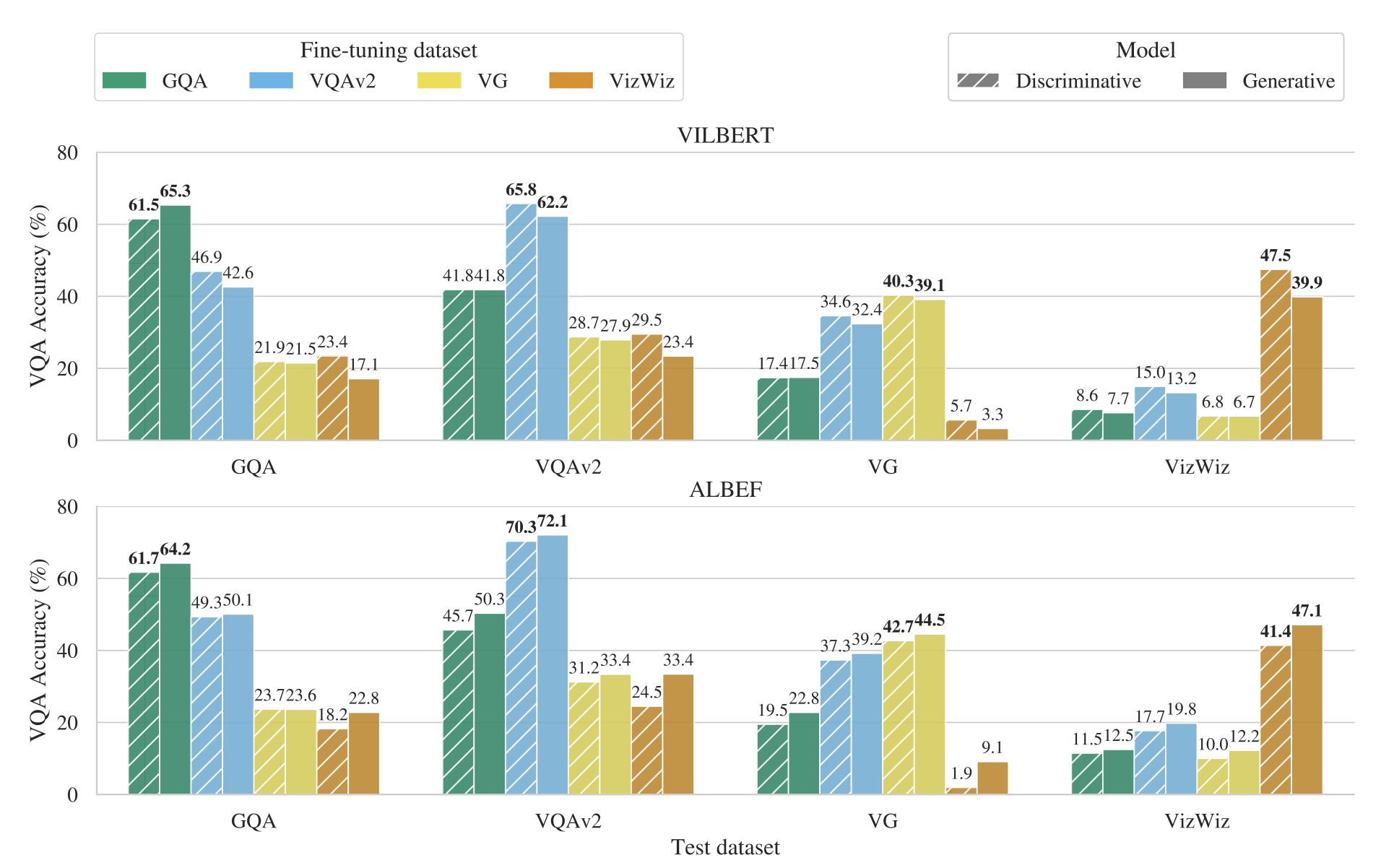

Measuring Progress in Fine-grained Vision-and-Language Understanding

Emanuele Bugliarello, Laurent Sartran, Aishwarya Agrawal, Lisa Anne Hendricks, Aida Nematzadeh

ACL 2023

[ArXiv]

|

|

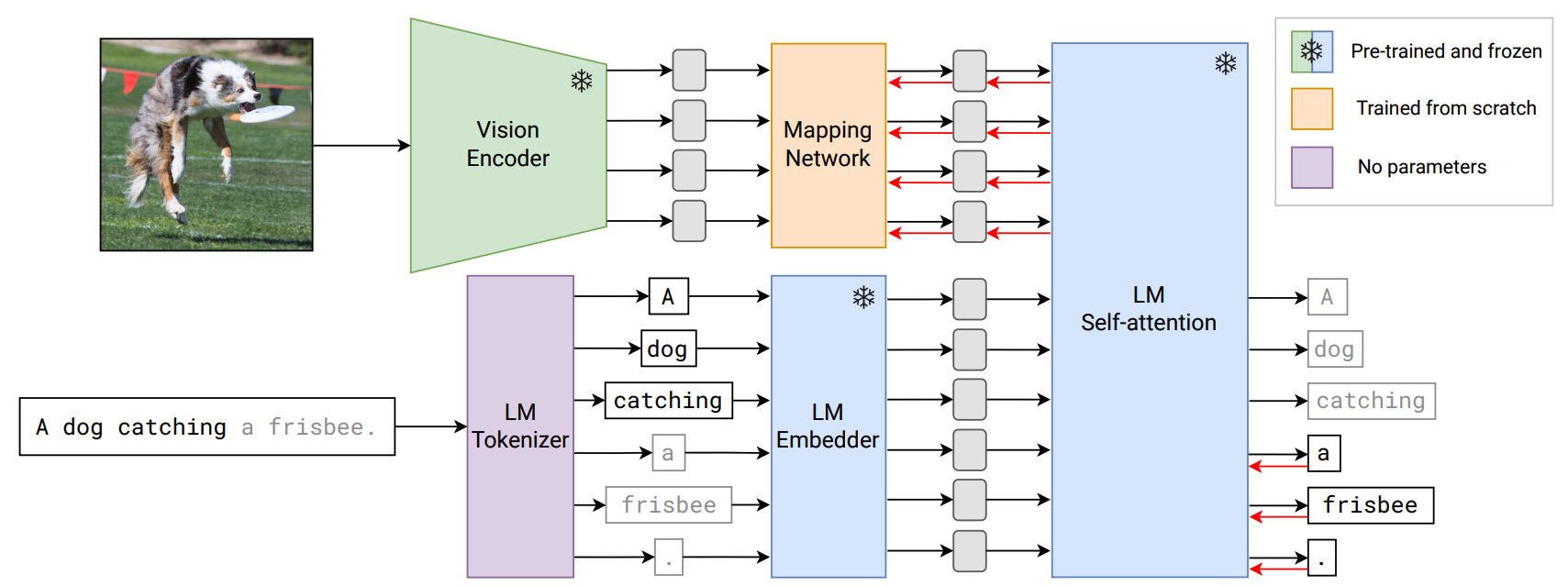

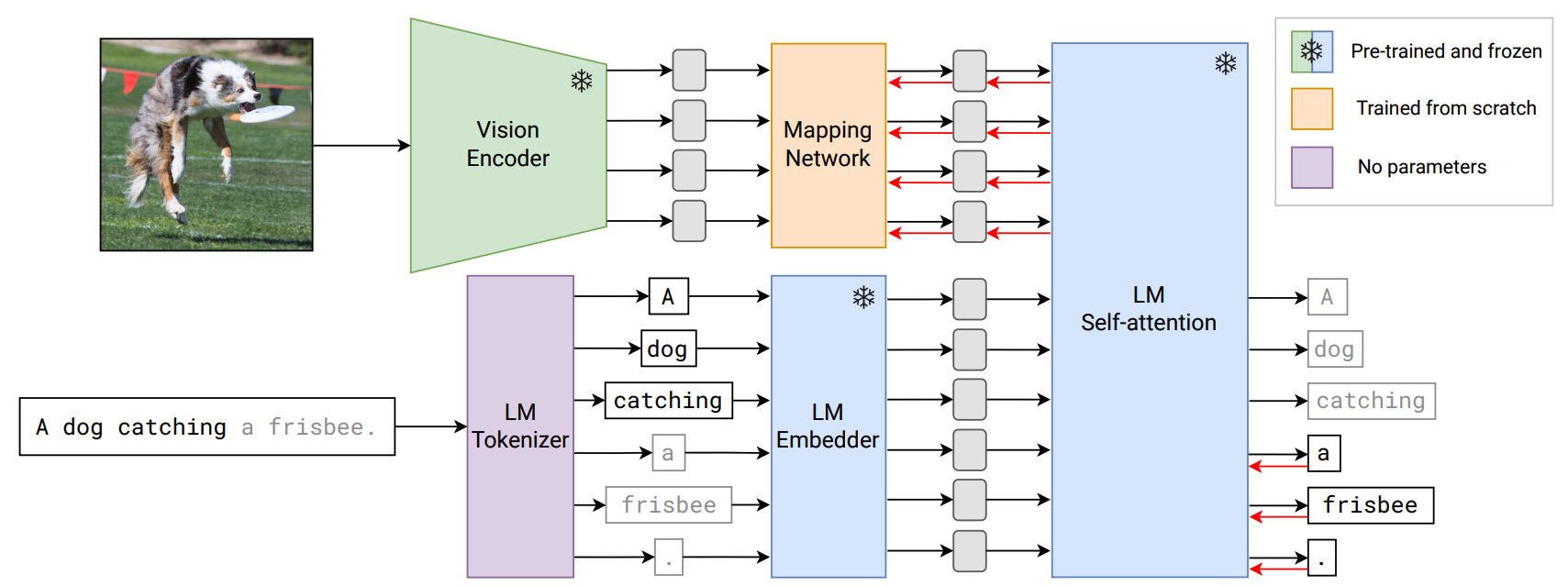

MAPL: Parameter-Efficient Adaptation of Unimodal Pre-Trained Models for Vision-Language Few-Shot Prompting

Oscar Mañas, Pau Rodríguez*, Saba Ahmadi*, Aida Nematzadeh, Yash Goyal, Aishwarya Agrawal

*equal contribution

EACL 2023 [Oral]

[ArXiv | Code | Live Demo]

|

|

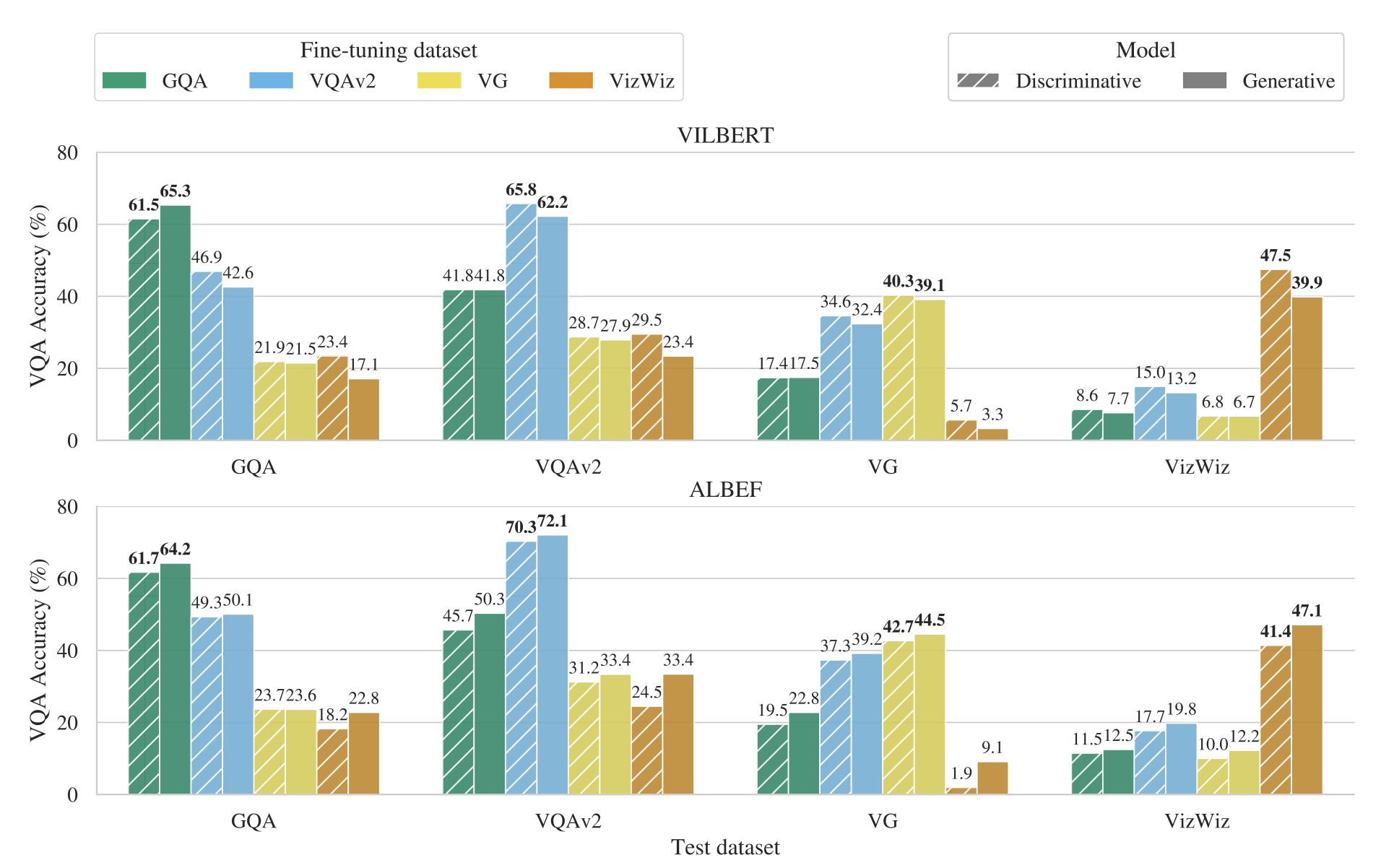

Rethinking Evaluation Practices in Visual Question Answering: A Case Study on Out-of-Distribution Generalization

Aishwarya Agrawal, Ivana Kajić, Emanuele Bugliarello, Elnaz Davoodi, Anita Gergely, Phil Blunsom, Aida Nematzadeh

(see paper for equal contributions)

EACL Findings 2023

[ArXiv]

|

|

Visual Question Answering and Beyond

Aishwarya Agrawal

PhD Dissertation, 2019

[PDF]

|

|

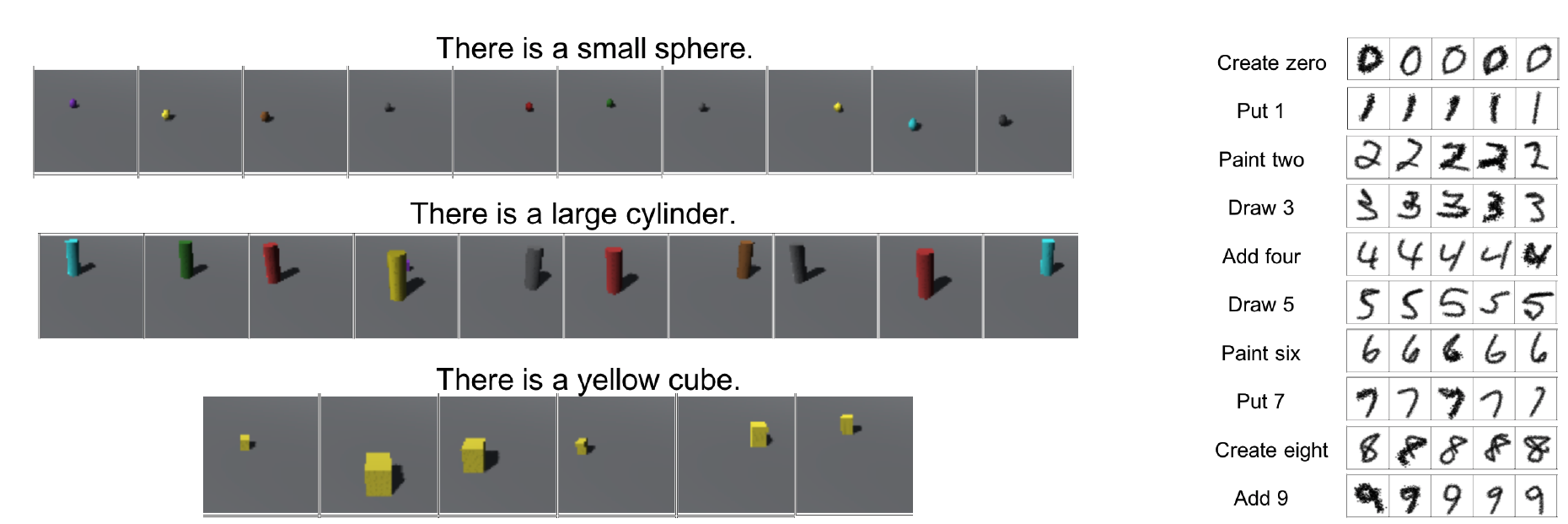

Generating Diverse Programs with Instruction Conditioned Reinforced Adversarial Learning

Aishwarya Agrawal, Mateusz Malinowski, Felix Hill, Ali Eslami, Oriol Vinyals, Tejas Kulkarni

Visually-Grounded Interaction and Language Workshop (spotlight), NIPS 2018

Learning by Instruction Workshop, NIPS 2018

[ArXiv]

|

|

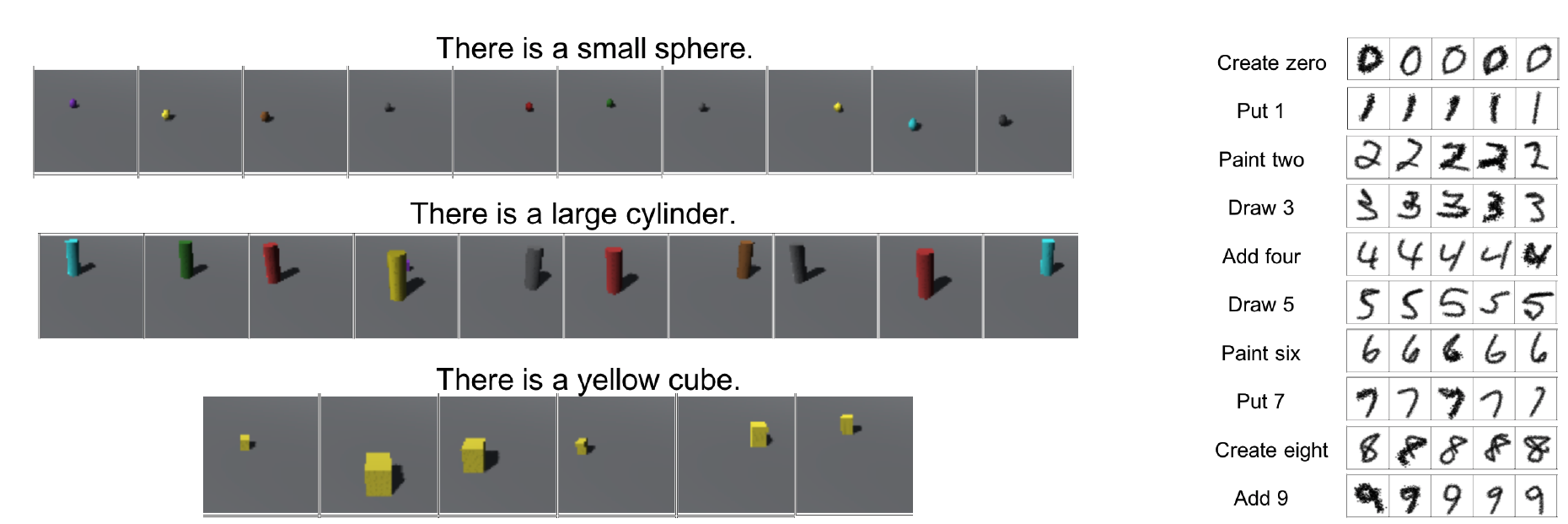

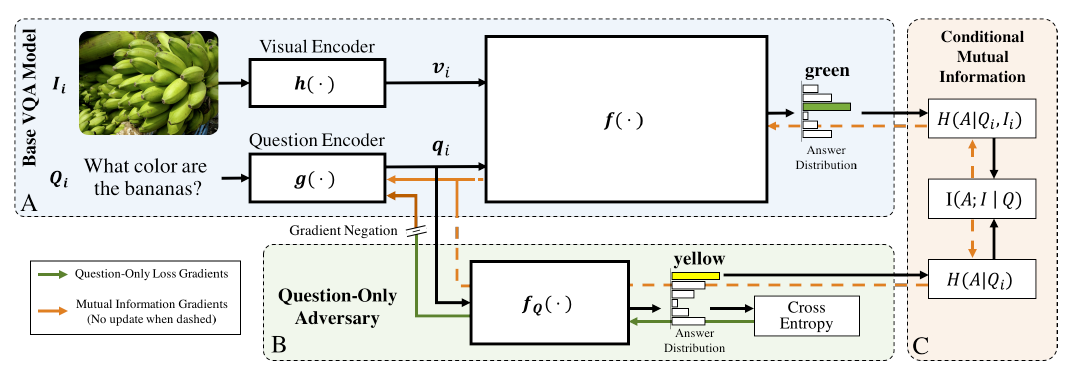

Overcoming Language Priors in Visual Question Answering with Adversarial Regularization

Sainandan Ramakrishnan, Aishwarya Agrawal, Stefan Lee

NIPS 2018

[ArXiv]

|

|

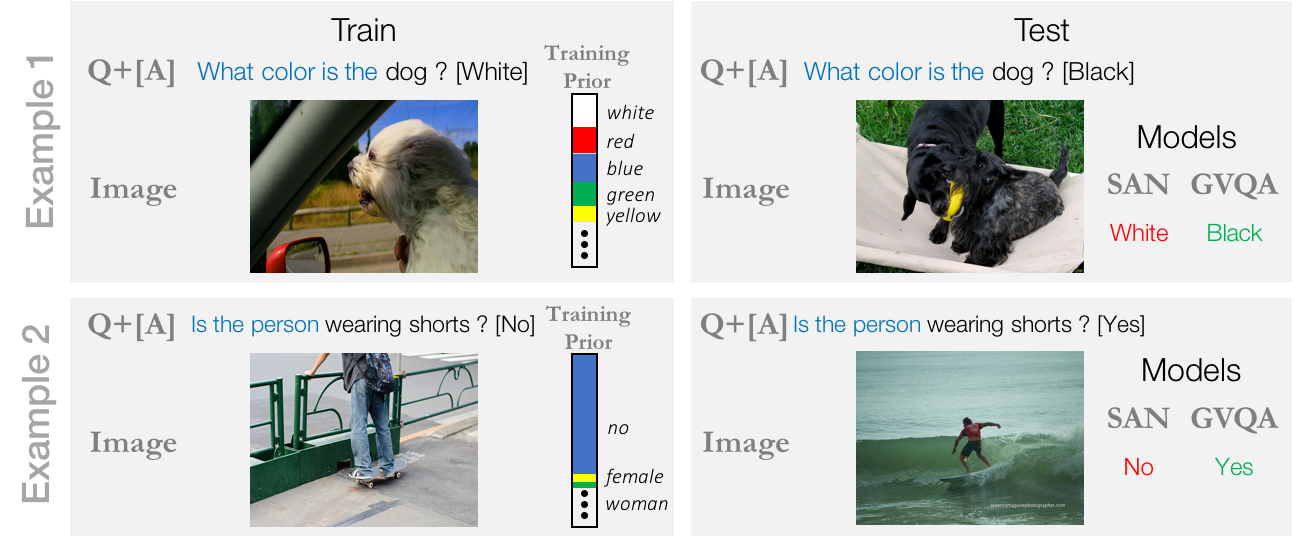

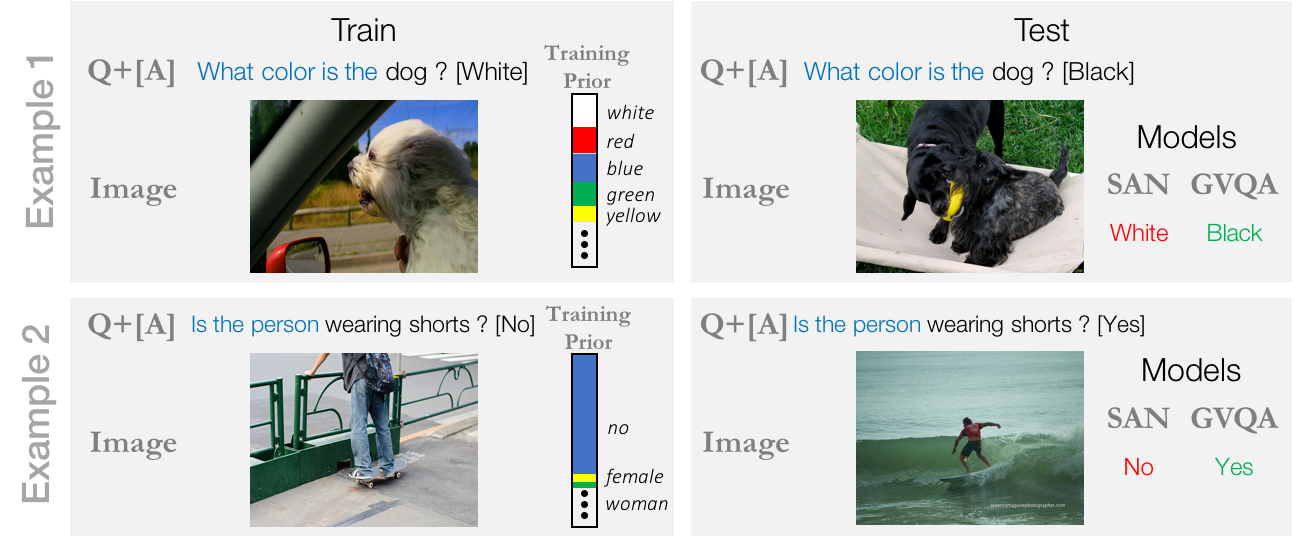

Don't Just Assume; Look and Answer: Overcoming Priors for Visual Question Answering

Aishwarya Agrawal, Dhruv Batra, Devi Parikh, Aniruddha Kembhavi

CVPR 2018

[ArXiv | Project Page]

|

|

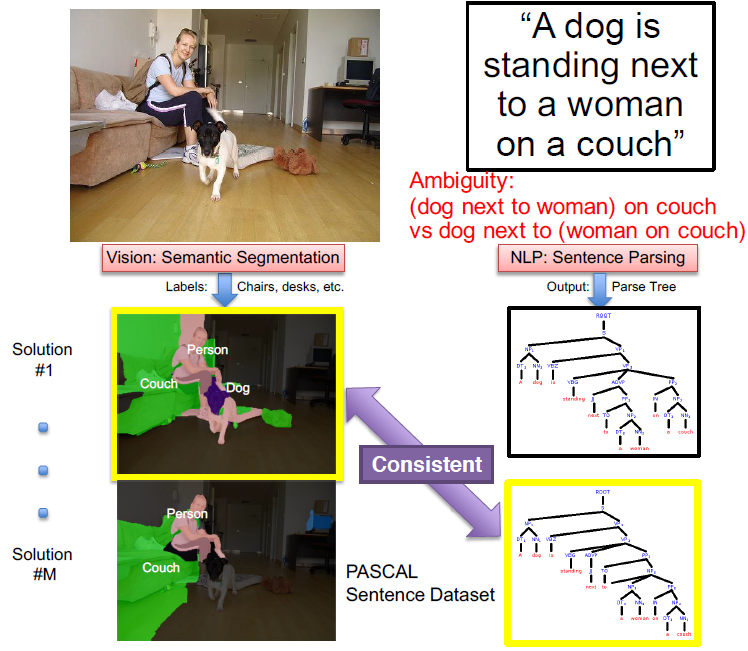

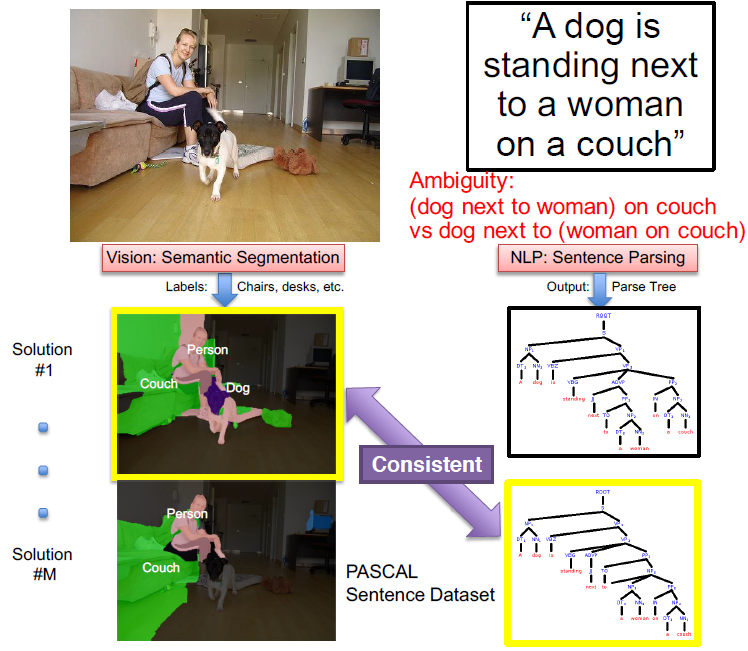

Resolving Language and Vision Ambiguities Together: Joint Segmentation & Prepositional Attachment Resolution in Captioned Scenes

Gordon Christie*, Ankit Laddha*, Aishwarya Agrawal, Stanislaw Antol, Yash Goyal, Kevin Kochersberger, Dhruv Batra

*equal contribution

Computer Vision and Image Understanding (CVIU) Journal, 2017

[ArXiv | Project Page]

|

|

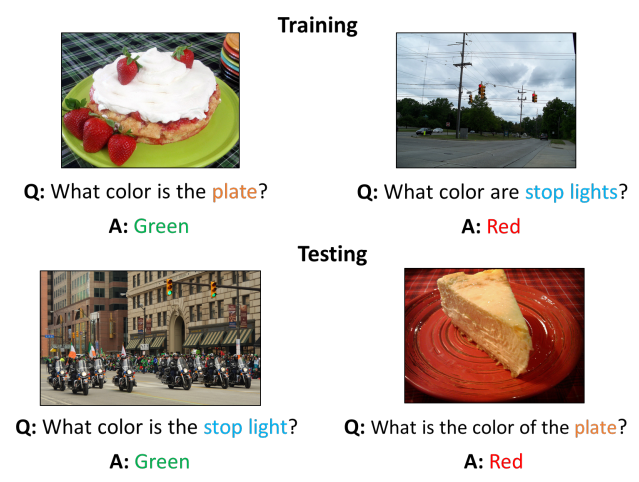

C-VQA: A Compositional Split of the Visual Question Answering (VQA) v1.0 Dataset

Aishwarya Agrawal, Aniruddha Kembhavi, Dhruv Batra, Devi Parikh

arXiv preprint, arXiv:1704.08243, 2017

[ArXiv]

|

|

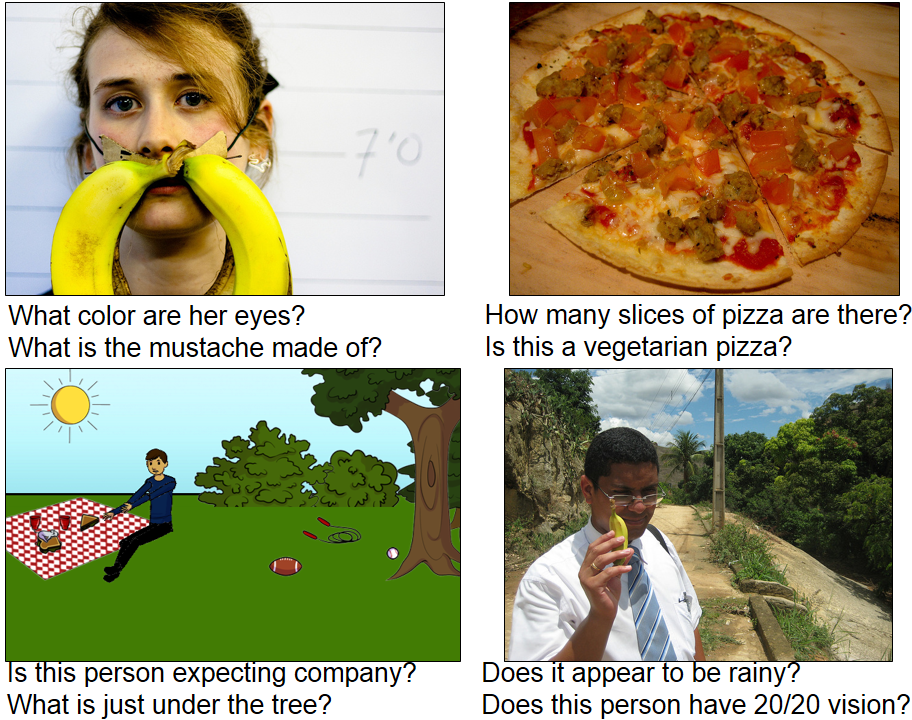

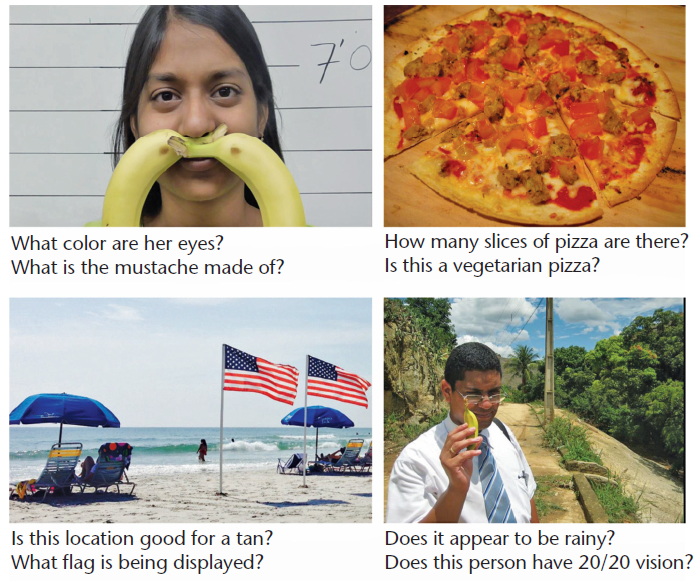

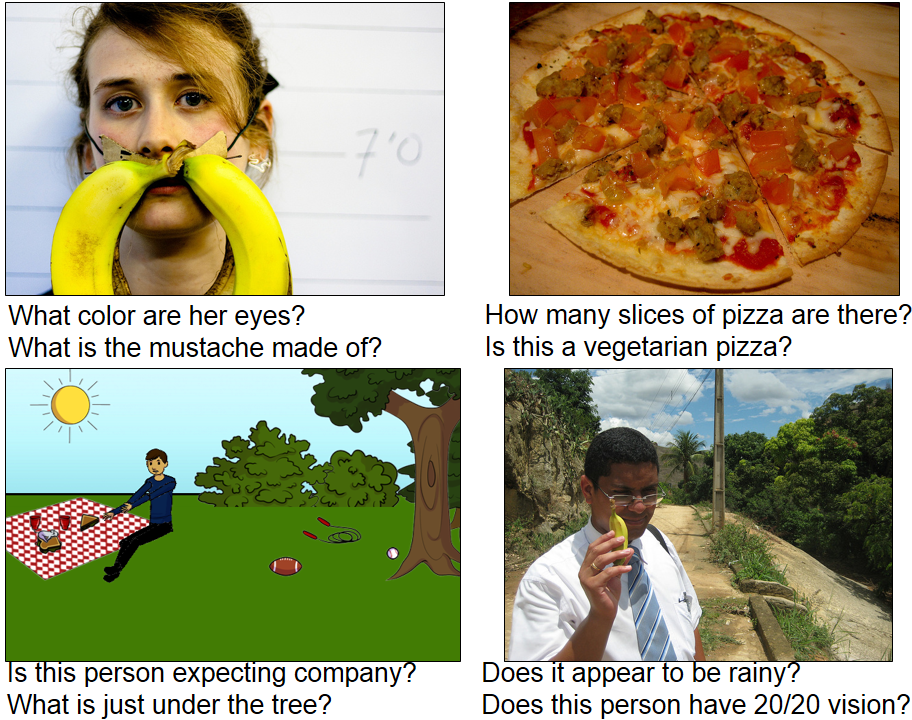

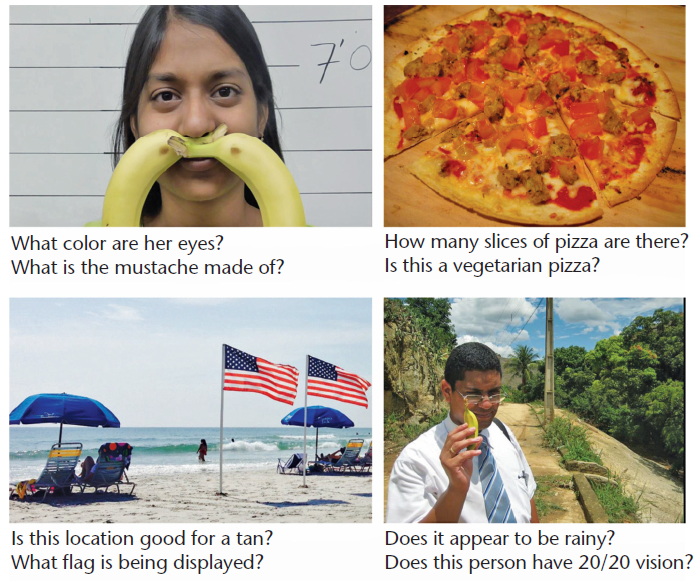

VQA: Visual Question Answering

Aishwarya Agrawal*, Jiasen Lu*, Stanislaw Antol*, Margaret Mitchell, Larry Zitnick, Devi Parikh, Dhruv Batra

*equal contribution

Special Issue on Combined Image and Language Understanding, International Journal of Computer Vision (IJCV), 2017

[ArXiv | visualqa.org (data, code, challenge) | slides | talk at GPU Technology Conference (GTC) 2016]

|

|

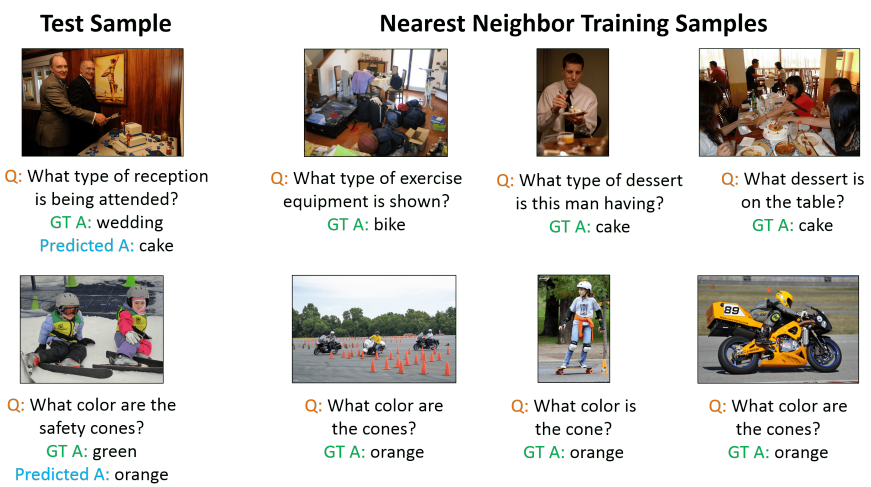

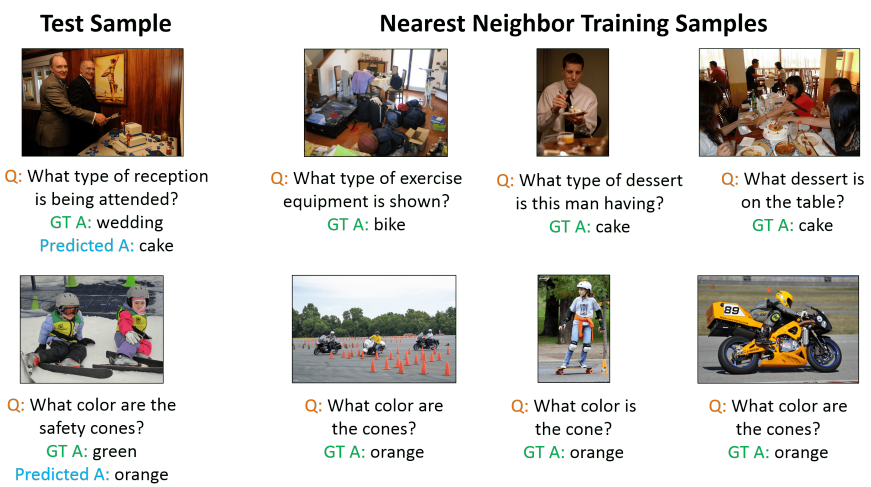

Analyzing the Behavior of Visual Question Answering Models

Aishwarya Agrawal, Dhruv Batra, Devi Parikh

EMNLP 2016

[ArXiv | slides | talk at Deep Learning Summer School, Montreal, 2016]

|

|

Resolving Language and Vision Ambiguities Together: Joint Segmentation & Prepositional Attachment Resolution in Captioned Scenes

Gordon Christie*, Ankit Laddha*, Aishwarya Agrawal, Stanislaw Antol, Yash Goyal, Kevin Kochersberger, Dhruv Batra

*equal contribution

EMNLP 2016

[ArXiv | Project Page]

|

|

Measuring Machine Intelligence Through Visual Question Answering

Larry Zitnick, Aishwarya Agrawal, Stanislaw Antol, Margaret Mitchell, Dhruv Batra, Devi Parikh

AI Magazine, 2016

[Paper | ArXiv]

|

|

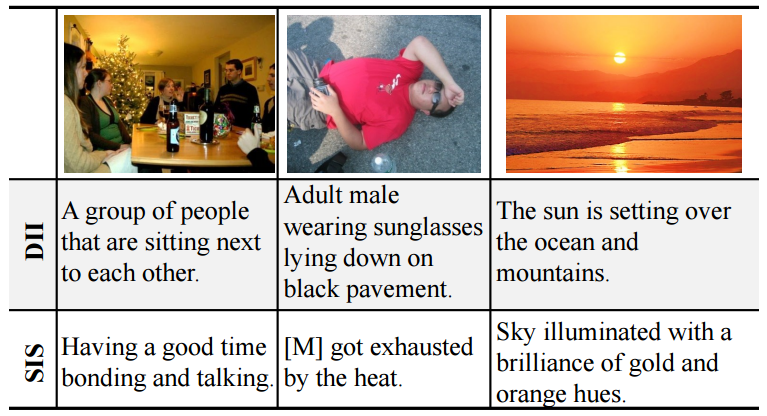

Visual Storytelling

Ting-Hao Huang, Francis Ferraro, Nasrin Mostafazadeh, Ishan Misra, Aishwarya Agrawal, Jacob Devlin, Ross Girshick, Xiaodong He, Pushmeet Kohli, Dhruv Batra, Larry Zitnick, Devi Parikh, Lucy Vanderwende, Michel Galley, Margaret Mitchell

NAACL 2016

[ArXiv | Project Page]

|

|

VQA: Visual Question Answering

Stanislaw Antol*, Aishwarya Agrawal*, Jiasen Lu, Margaret Mitchell, Dhruv Batra, Larry Zitnick, Devi Parikh

*equal contribution

ICCV 2015

[ICCV Camera Ready Paper | ArXiv | ICCV Spotlight | visualqa.org (data, code, challenge) | slides | talk at GPU Technology Conference (GTC) 2016]

|

|

A Novel LBP Based Operator for Tone Mapping HDR Images

Aishwarya Agrawal, Shanmuganathan Raman

International Conference on Signal Processing and Communications (SPCOM-2014)

[Paper | Poster]

|

|

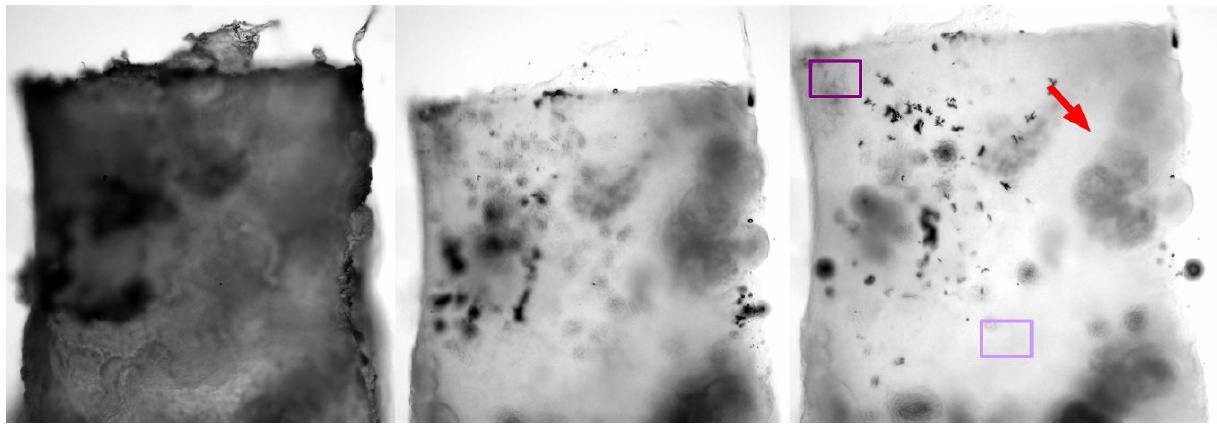

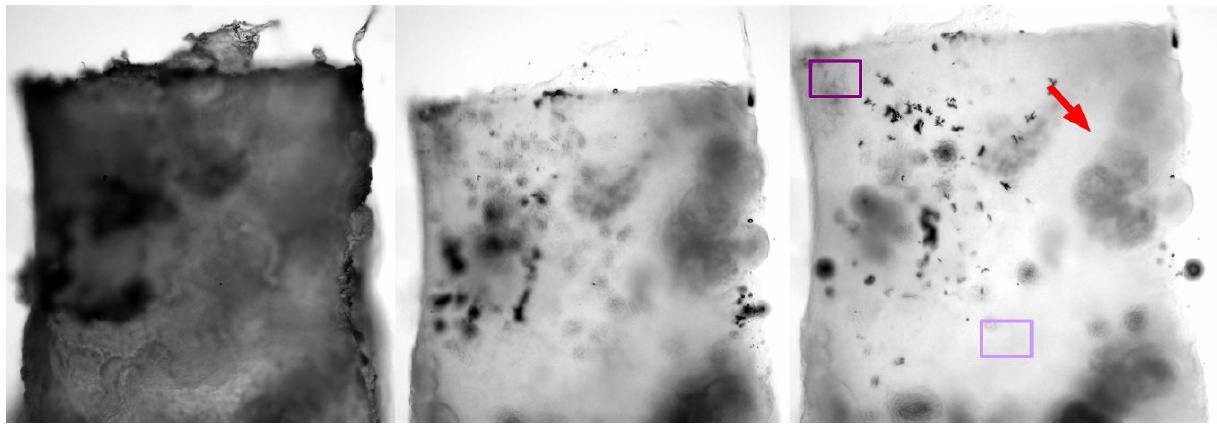

Optically clearing tissue as an initial step for 3D imaging of core biopsies to diagnose pancreatic cancer

Ronnie Das, Aishwarya Agrawal, Melissa P. Upton, Eric J. Seibel

SPIE BiOS, International Society for Optics and Photonics, 2014

[Paper]

|